Webcam Fetcher for Screensavers

While cleaning up an old network-attached storage (NAS) box at home the other day, I found the remnants of an old webcam-scraping project I built when we first moved out to California. California was an interesting place for us, but we often felt out of sync with the world because all of our friends and family lived back on the East Coast. One day, while looking through the screensaver options on my desktop, I started thinking about pictures I could plug into it to remind me of home. Then I had an idea: why not write a simple script to periodically download pictures from public webcams and route them into the screensaver? These webcams could provide a simple, passive portal through which we could keep tabs on the places we used to know.

It didn't take long to write a simple Perl script that scraped a few webcams and saved the images to my screensaver's picture directory. As I added more webcams, I found I needed the script to do more sophisticated things. After the project stabilized, I migrated it to a low-power NAS, which let the scraper run at more reliable intervals. Eventually I retired the project because the never-ending updates from around the world were just too distracting.

Building the Webcam Scraper

The first version of my webcam scraper used a simple Perl script to retrieve images from different webcams I'd found on the web. This work wasn't difficult: many of the webcams I looked at simply referenced their most recent picture with a static URL. All I had to do was store a list of the URLs I wanted in a text file and then use the Perl script to download each URL and datestamp its image. It didn't take long before I found a few webcams whose image URLs changed over time. I updated my script so it would parse the HTML page that embedded the image and pull the image's URL out of it. In most cases this could be done by simply extracting the n-th image URL on the page. The system worked well, and I built up a good list of webcams I could use.
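To make the two retrieval modes concrete, here is a minimal sketch in Python rather than the original Perl. It assumes each list entry supplies a hypothetical camera name, a URL, and an optional 0-based index `nth`. With no index, the URL is fetched directly as the image; with an index, the URL is treated as an HTML page and the `src` of its n-th `<img>` tag is resolved against the page URL. The naming scheme in `datestamped_name` and the `.jpg` extension are illustrative assumptions.

```python
import datetime
import os
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageCollector(HTMLParser):
    """Collect the src attribute of every <img> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.srcs = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.srcs.append(src)


def nth_image_url(page_url, html, n):
    """Return the absolute URL of the n-th (0-based) <img> on an HTML page."""
    parser = ImageCollector()
    parser.feed(html)
    parser.close()
    return urljoin(page_url, parser.srcs[n])


def datestamped_name(cam_name, when):
    """Hypothetical naming scheme, e.g. 'harbor_20240101-1300.jpg'."""
    return f"{cam_name}_{when.strftime('%Y%m%d-%H%M')}.jpg"


def fetch(url, timeout=30):
    """Download a URL and return its body as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def grab(cam_name, url, out_dir, nth=None):
    """Save one webcam image into out_dir and return its path.

    With nth=None the URL is the image itself (a static webcam URL);
    otherwise the URL is an HTML page and its nth <img> is downloaded.
    """
    if nth is not None:
        html = fetch(url).decode("utf-8", errors="replace")
        url = nth_image_url(url, html, nth)
    data = fetch(url)  # fetch first so a failed download leaves no empty file
    path = os.path.join(out_dir, datestamped_name(cam_name, datetime.datetime.now()))
    with open(path, "wb") as f:
        f.write(data)
    return path
```

For example, `grab("harbor", "http://example.com/cam/page.html", "/tmp/cams", nth=1)` would save the second image on that page into an existing `/tmp/cams` directory. Selecting an image by position is brittle, since any layout change on the page shifts the index.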

The next trick was adjusting how frequently the scraper grabbed data. Webcams update at different intervals and are often offline (or boring) at night in the webcam's time zone. I added timing-interval information to my webcam list to control how often to grab (hourly, daily, or weekly), as well as some hooks to set the hours of operation for the grabs. I also compared each grab to its previous result to do simple deduplication. If a webcam returned too many duplicates in a row, or no data at all, the script marked it as inactive so I wouldn't pound its server over and over.
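The scheduling and deduplication rules can be sketched as two small functions. This is a hypothetical Python reconstruction, not the original script. `due` checks whether a cam is active, inside its hours of operation (passed in as the current hour in the cam's own time zone, with windows allowed to wrap past midnight), and past its grab interval. `record` compares a SHA-256 digest of the new image with the previous one and marks the cam inactive after `MAX_MISSES` consecutive duplicates or empty grabs. The threshold of 5 and all field names are assumptions.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical grab intervals, as minimum gaps between grab attempts.
INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}
MAX_MISSES = 5  # assumed threshold of consecutive duplicates/failures


@dataclass
class CamState:
    interval: str = "hourly"
    start_hour: int = 0   # hours of operation, in the cam's local time
    end_hour: int = 24
    last_grab: Optional[datetime] = None
    last_hash: Optional[str] = None
    misses: int = 0       # consecutive duplicates or empty grabs
    active: bool = True


def in_hours(cam, local_hour):
    """True if local_hour falls in [start_hour, end_hour), wrapping past midnight."""
    if cam.start_hour <= cam.end_hour:
        return cam.start_hour <= local_hour < cam.end_hour
    return local_hour >= cam.start_hour or local_hour < cam.end_hour


def due(cam, now, local_hour):
    """Should the scraper grab this cam now? local_hour is the hour at the cam."""
    if not cam.active or not in_hours(cam, local_hour):
        return False
    return cam.last_grab is None or now - cam.last_grab >= INTERVALS[cam.interval]


def record(cam, data, now):
    """Update dedupe/health state after a grab attempt; True if the image is new."""
    cam.last_grab = now
    digest = hashlib.sha256(data).hexdigest() if data else None
    if digest is None or digest == cam.last_hash:
        cam.misses += 1
        if cam.misses >= MAX_MISSES:
            cam.active = False  # stop hitting this server until re-enabled
        return False
    cam.last_hash = digest
    cam.misses = 0
    return True
```

Hashing only catches byte-identical repeats; a camera that re-encodes the same scene with a changing timestamp overlay would still count as "new" under this rule.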

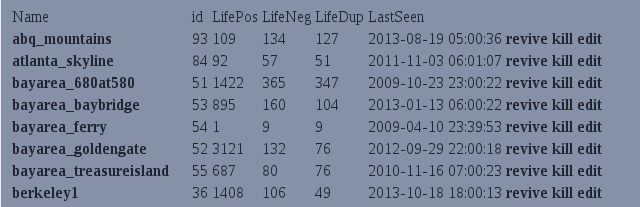

By this point the list of webcams was getting long and becoming difficult to manage from the command line. I rewrote the script to externalize all of the URL data and statistics into a SQLite database. The database let me keep better statistics on each webcam, which in turn let me make better-informed estimates about whether a camera was out of commission. It also gave me an easy way to put a simple GUI on top: all I had to do was write a Perl CGI script that took user input and fed it into the database. Editing the settings in a web page was a huge improvement over editing text files.
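A schema along these lines would cover both the per-cam settings and the statistics. The table and column names are hypothetical, and the sketch uses Python's built-in `sqlite3` module rather than Perl. Logging every grab with a status of `new`, `dup`, or `fail` makes it easy to ask what fraction of a camera's recent grabs produced a fresh image, which is a steadier signal of a dead camera than any single failed request.

```python
import sqlite3

# Hypothetical schema: one row per camera, one row per grab attempt.
SCHEMA = """
CREATE TABLE IF NOT EXISTS cams (
    id         INTEGER PRIMARY KEY,
    name       TEXT UNIQUE NOT NULL,
    url        TEXT NOT NULL,
    nth_image  INTEGER,               -- NULL: the URL is the image itself
    grab_every TEXT NOT NULL DEFAULT 'hourly',
    active     INTEGER NOT NULL DEFAULT 1
);
CREATE TABLE IF NOT EXISTS grabs (
    cam_id     INTEGER NOT NULL REFERENCES cams(id),
    grabbed    TEXT NOT NULL,         -- ISO-8601 timestamp
    status     TEXT NOT NULL CHECK (status IN ('new', 'dup', 'fail')),
    size_bytes INTEGER
);
"""


def open_db(path):
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def add_cam(conn, name, url, nth_image=None, grab_every="hourly"):
    with conn:  # commits on success
        cur = conn.execute(
            "INSERT INTO cams (name, url, nth_image, grab_every) VALUES (?, ?, ?, ?)",
            (name, url, nth_image, grab_every),
        )
    return cur.lastrowid


def log_grab(conn, cam_id, grabbed, status, size_bytes=None):
    with conn:
        conn.execute(
            "INSERT INTO grabs (cam_id, grabbed, status, size_bytes) VALUES (?, ?, ?, ?)",
            (cam_id, grabbed, status, size_bytes),
        )


def new_ratio(conn, cam_id, last_n=20):
    """Fraction of the cam's last_n grabs that produced a new image (None if none)."""
    rows = conn.execute(
        "SELECT status FROM grabs WHERE cam_id = ? ORDER BY grabbed DESC LIMIT ?",
        (cam_id, last_n),
    ).fetchall()
    if not rows:
        return None
    return sum(1 for (status,) in rows if status == "new") / len(rows)
```

With this split, a CGI front end only needs to read and update rows in `cams`, while the scraper appends to `grabs`.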

Running on a NAS

I originally ran the scraper on my desktop, but the results weren't very consistent because I only powered up the desktop when I needed it. I happened to have a Buffalo Linkstation NAS, which I'd read ran Linux on an ARM processor. After reading a lot of webpages, I learned that others had found a security vulnerability in the Linkstation's software and had written a tool that exploited it to get a root account (!!). I ran the tool, got root, and installed some missing binaries to make the box more usable. I had to cross-compile some tools from source because the Linkstation uses an ARM processor, but the open NAS community did a good job of spelling out everything you needed to do.

I didn't have to do much to get the project running on the NAS. I had to install some Perl packages and SQLite libraries, but the scraper worked without any major changes. I created a cron job to run the scraper once an hour and had it dump data to an exported (network-shared) directory. The Linkstation already had a web server on it, so all I had to do to install my web-based editor was copy the CGI script into the existing web server directory. The nice thing about running on the NAS was that the hardware drew only about 7 W, so I didn't feel bad about leaving it on for long periods of time. Before there was the cloud for launch-and-forget apps, I had the NAS box.

Routing to a Screensaver

Getting pictures into a Linux screensaver was more annoying than I thought it would be. GNOME's default screensaver was very particular about where you were supposed to put pictures. It wasn't a big deal when I ran the scraper on my desktop, but when I started using the NAS, I didn't have a way to point GNOME at the NAS's mount point. The solution was to ditch GNOME's screensaver and start using XScreenSaver. GNOME didn't make it easy to switch, but XScreenSaver gave me all the options I needed for controlling where it should get pictures. The scraper stored each webcam's pictures in its own directory, and then merged the latest results into a separate directory that XScreenSaver could use.
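The merge step might look like the following Python sketch. It assumes a hypothetical layout with one subdirectory per webcam under `cams_root`, and file names that sort chronologically (for example, ones carrying a `YYYYMMDD-HHMM` datestamp). It clears `saver_dir`, then copies the newest `keep_per_cam` images from each webcam into it, prefixing each copy with the webcam's directory name so files from different cameras cannot collide.

```python
import os
import shutil

IMAGE_EXTS = (".jpg", ".jpeg", ".png", ".gif")


def refresh_screensaver_dir(cams_root, saver_dir, keep_per_cam=1):
    """Copy the newest keep_per_cam images from each cam directory into saver_dir.

    Assumes cams_root/<cam_name>/<files> and file names that sort chronologically.
    Returns the names of the copied files.
    """
    if keep_per_cam < 1:
        raise ValueError("keep_per_cam must be at least 1")
    os.makedirs(saver_dir, exist_ok=True)
    # Clear out the previous merge so pictures from dead cams age out.
    for name in os.listdir(saver_dir):
        path = os.path.join(saver_dir, name)
        if os.path.isfile(path):
            os.remove(path)
    copied = []
    for cam in sorted(os.listdir(cams_root)):
        cam_dir = os.path.join(cams_root, cam)
        if not os.path.isdir(cam_dir):
            continue
        images = sorted(f for f in os.listdir(cam_dir) if f.lower().endswith(IMAGE_EXTS))
        for name in images[-keep_per_cam:]:
            # Prefix with the cam name so files from different cams never collide.
            dest = f"{cam}-{name}"
            shutil.copy2(os.path.join(cam_dir, name), os.path.join(saver_dir, dest))
            copied.append(dest)
    return copied
```

XScreenSaver's image-based display modes read from the directory named by the `imageDirectory` setting in `~/.xscreensaver`, so that setting would point at `saver_dir`. Keep `saver_dir` outside `cams_root`; otherwise the next run would treat it as another webcam.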

Statistics

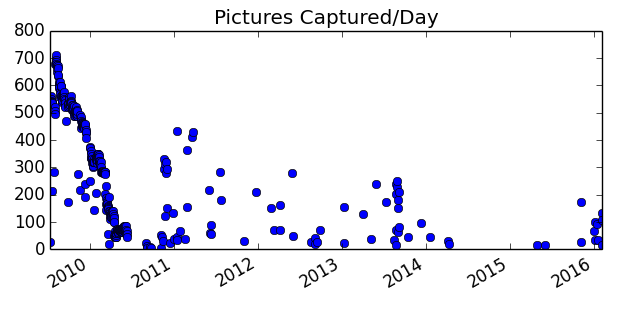

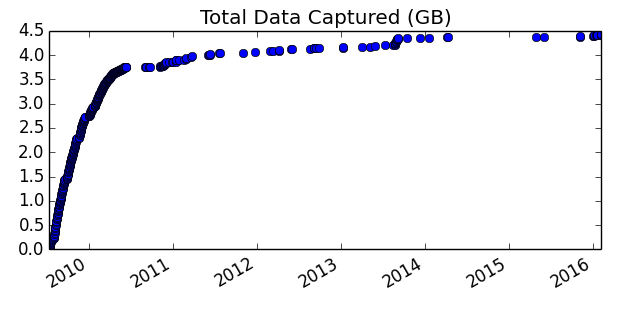

The plots above show how many pictures I was grabbing per day. This data is interesting to me because it shows two things. First, the dots get sparser as time goes on because I changed how often I powered up the NAS. Initially I had it running all the time, but then I started running it only when I needed it. Eventually I stopped using it altogether, except when I wanted to do backups. Second, you can see how webcams die off over time. I didn't update the webcam list very often (maybe once a year at best), and webcams are unfortunately often short-lived, so the number of images dropped off as individual cams died.

In terms of data, I grabbed from 60 different webcams and accumulated 107K pictures. The pictures amount to about 4.5 GB of data, which means the average picture was about 42 KB. The majority of the data came in the first year of use.
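The average follows directly from the totals, assuming decimal units (1 GB = 10^9 bytes, 1 KB = 10^3 bytes):

```latex
\frac{4.5 \times 10^{9}\ \text{bytes}}{107 \times 10^{3}\ \text{pictures}}
  \approx 4.2 \times 10^{4}\ \text{bytes/picture}
  \approx 42\ \text{KB/picture}
```

If the 4.5 GB figure is read in binary units (GiB), the same count gives roughly 44 KiB per picture. Spread across 60 webcams, 107K pictures also works out to roughly 1,800 pictures per camera.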

Ending

The webcam project was fun but addictive. Any time the screensaver kicked on I found myself watching it like TV, wondering what the next webcam would look like. I worked on a few other features before I moved on. One was the ability to grab short video streams from MJPEG webcams. It wasn't hard to grab these video streams, but I didn't have a way to display them in the screensaver.
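Grabbing a short clip from an MJPEG webcam can be sketched by splitting the byte stream on JPEG markers. This Python version is a simplification under stated assumptions: instead of parsing the `multipart/x-mixed-replace` boundaries and per-part headers that MJPEG-over-HTTP streams typically carry, it scans for the JPEG start-of-image (`FF D8`) and end-of-image (`FF D9`) markers. That is often enough for camera streams, but it would cut a frame short if the frame embedded a thumbnail image (for example in EXIF data), because the thumbnail's own end marker would match first.

```python
import urllib.request

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpeg_frames(buffer):
    """Pull complete JPEG frames out of a byte buffer.

    Returns (frames, leftover); leftover is the unconsumed tail that may
    hold the beginning of the next frame and should be prepended to the
    next chunk read from the stream.
    """
    frames = []
    while True:
        start = buffer.find(SOI)
        if start < 0:
            # Keep a trailing 0xFF in case the next chunk starts with 0xD8.
            return frames, buffer[-1:]
        end = buffer.find(EOI, start + 2)
        if end < 0:
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]


def grab_mjpeg(url, max_frames=50, chunk_size=4096, timeout=30):
    """Read up to max_frames JPEG frames from an MJPEG HTTP stream."""
    frames, buf = [], b""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        while len(frames) < max_frames:
            chunk = resp.read(chunk_size)
            if not chunk:
                break
            new, buf = split_jpeg_frames(buf + chunk)
            frames.extend(new)
    return frames[:max_frames]
```

`grab_mjpeg(url)` returns a list of JPEG byte strings that could be saved as individual stills; replaying them as video would still need a viewer that understands frame sequences.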

I also learned a good bit about security and privacy during this project. While searching for new sources, I found that a lot of people were installing webcams without realizing they were accessible from anywhere in the world. You can find tons of open IP webcams on Google through "inurl" searches. Most of these were traffic cams or convenience stores, but I also saw some home cameras that were in the open (e.g., pet cams for people to check up on their pets during the day). This hits on a big problem with consumer security these days: how do you know your devices aren't doing something you'd be upset to hear about? Better home gateways would be a start. ISP analysis would be even better, assuming people didn't freak out about a third party monitoring their connections.