|

|

| Craig Ulmer |

Extending Composable Data Services into SmartNICsIn my SmartNICs project we've been busy building examples of how collections of SmartNICs in an HPC platform can work together to implement data services that are useful to HPC workflows. In the Fall we built a distributed, particle-sifting example that reorganizes simulation results into a form that's easier for downstream applications to consume. We used Faodel to control the distribution of data between collections of SmartNICs and Apache Arrow to reorganize the data. Thanks to Sandia's Glinda cluster, this is the first time we've had the opportunity to run SmartNIC experiments at the scale of 100 cards.   AbstractAdvanced scientific-computing workflows rely on composable data services to migrate data between simulation and analysis jobs that run in parallel on high-performance computing (HPC) platforms. Unfortunately, these services consume compute-node memory and processing resources that could otherwise be used to complete the workflow's tasks. The emergence of programmable network interface cards, or SmartNICs, presents an opportunity to host data services in an isolated space within a compute node that does not impact host resources. In this paper we explore extending data services into SmartNICs and describe a software stack for services that uses Faodel and Apache Arrow. To illustrate how this stack operates, we present a case study that implements a distributed, particle-sifting service for reorganizing simulation results. Performance experiments from a 100-node cluster equipped with 100Gb/s BlueField-2 SmartNICs indicate that current SmartNICs can perform useful data management tasks, albeit at a lower throughput than hosts. Best Paper AwardAt the conference they gave us an award for best paper. I really enjoyed meeting the other CompSYS folks, and had good, friendly discussions with a number of people.  Publication

Presentation

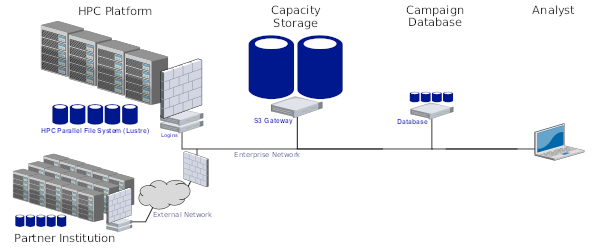

| Accessing Institutional S3 Object StorageOne of the challenges of working with large, long-term datasets is that you need to think about how to host the data in a way that meets FAIR principles (ie, data should be Findable, Accessible, Interoperable, and Reusable). While the simplest solution is just to leave data on an HPC platform, capability storage is expensive and typically isolated in a way that forces users to come to the platform to do their work. Realizing that what researchers need is large capacity storage on the enterprise network, Sandia's Institution Computing effort procured two large S3 storage appliances last year and opened up use for friendly users.  This year I worked in an ASC project that looked into how we could better leverage S3 stores in our ModSim work. We experimented with the AWS SDK for C++ and created exemplars for exchanging data with a store. We then benchmarked S3 performance for three different stores: the new institutional appliance, a previous S3 system used for devops, and an in-platform S3 store built on top of a 16-node, NVMe-equipped Ceph cluster. While S3 performance is low compared to HPC file systems, we found there is an opportunity to decompose our ModSim data into smaller fragments that would allow desktop users on the enterprise network to fetch just the parts of the data they need. AbstractRecent efforts at Sandia such as DataSEA are creating search engines that enable analysts to query the institution's massive archive of simulation and experiment data. The benefit of this work is that analysts will be able to retrieve all historical information about a system component that the institution has amassed over the years and make better-informed decisions in current work. As DataSEA gains momentum, it faces multiple technical challenges relating to capacity storage. From a raw capacity perspective, data producers will rapidly overwhelm the system with massive amounts of data. From an accessibility perspective, analysts will expect to be able to retrieve any portion of the bulk data, from any system on the enterprise network. Sandia's Institutional Computing is mitigating storage problems at the enterprise level by procuring new capacity storage systems that can be accessed from anywhere on the enterprise network. These systems use the simple storage service, or S3, API for data transfers. While S3 uses objects instead of files, users can access it from their desktops or Sandia's high-performance computing (HPC) platforms. S3 is particularly well suited for bulk storage in DataSEA, as datasets can be decomposed into object that can be referenced and retrieved individually, as needed by an analyst. In this report we describe our experiences working with S3 storage and provide information about how developers can leverage Sandia's current systems. We present performance results from two sets of experiments. First, we measure S3 throughput when exchanging data between four different HPC platforms and two different enterprise S3 storage systems on the Sandia Restricted Network (SRN). Second, we measure the performance of S3 when communicating with a custom-built Ceph storage system that was constructed from HPC components. Overall, while S3 storage is significantly slower than traditional HPC storage, it provides significant accessibility benefits that will be valuable for archiving and exploiting historical data. There are multiple opportunities that arise from this work, including enhancing DataSEA to leverage S3 for bulk storage and adding native S3 support to Sandia's IOSS library. Publication

| Processing Particle Data Flows with SmartNICsIn my SmartNICs project I've been working with US Santa Cruz on new software that makes it easier to process particle data streams as they flow through the network. We've been using Apache Arrow as a way to do a lot of the heavy lifting, because Arrow provides an easy-to-use tabular data representation and has excellent serialization, query, and compute functions. For this HPEC paper we converted three particle datasets to an Arrow representation and then measured how quickly Arrow could split data into smaller tables for a log-structured merge (LSM) tree implementation we're developing. Jianshen then dug into getting the BlueField-2's compression hardware to accelerate the unpacking/packing of data with a library he developed named Bitar. After HPEC we wrote an extended version of this paper for ArXiv that includes some additional plots that had previously been cut due to page limits.  The datasets for this were pretty fun. I pulled and converted particle data from CERN's TrackML Particle Identification challenge, airplane positions from the Opensky Network, and ship positions from NOAA and Marine Cadastre. One of the benefits of working with Arrow is that it let us use existing tools to do a lot of the data. I just used Pandas to read the initial data, restructure it, and save it out to compact parquet files that our tests could quickly load at runtime. Even though each dataset had a varying number of columns, our Arrow code could process each one so long as the position and ID columns had the proper labels. AbstractMany distributed applications implement complex data flows and need a flexible mechanism for routing data between producers and consumers. Recent advances in programmable network interface cards, or SmartNICs, represent an opportunity to offload data-flow tasks into the network fabric, thereby freeing the hosts to perform other work. System architects in this space face multiple questions about the best way to leverage SmartNICs as processing elements in data flows. In this paper, we advocate the use of Apache Arrow as a foundation for implementing data- flow tasks on SmartNICs. We report on our experiences adapting a partitioning algorithm for particle data to Apache Arrow and measure the on-card processing performance for the BlueField-2 SmartNIC. Our experiments confirm that the BlueField-2's (de)compression hardware can have a significant impact on in- transit workflows where data must be unpacked, processed, and repacked. Publication

| Employee Recognition Award for Globus Work2022-05-17 networks

I won an an individual Employee Recognition Award (ERA) for some work that I've been doing with Globus. At the award ceremony today I got to shake hands with the lab president and several VPs. Here's the ceremonial coin they gave me:  GlobusFor the last few years I've worked as a "data czar" in a few, large multi-lab research projects. One of the problems with being the data czar is that you often need to find a way to move large amounts of data between partner labs. While some of the open science labs have good mechanisms for exchanging data, most labs have strict access controls on data egress/ingress via the Internet. As a result, it isn't uncommon for researchers just bring hard drives with them when they have in-person meetings, so they can physically hand datasets over to their colleagues. It's a clunky way to collaborate. About 20 years ago, Grid Computing people solved this problem by setting up special data transfer nodes (DTNs) at the edge of the network that have special software for maximizing throughput on large (many TBs) transfers. They eventually spun the technology off as a company named Globus. Globus acts as a third party that users could access to coordinate transfers between different DTNs. While the free tier of sevice is sufficient for basic use, Globus makes money by selling enterprise subscriptions that have features that most users would want (encryption, throttling, extra security, etc). Sandia didn't have a Globus DTN, but our networking people were interested in seeing how well it could leverage our new 100Gbps site infrastructure. With the help of a few smart people, we worked through the lengthy approval process, stood up a DTN with 70TB of storage, and worked through more approval processes to connect to a few trial labs. While the initial transfers across the US were much lower than the raw link speed due to out HDDs, we were able to pull a few TBs of data in no time. Shortly after we let people know of our success, we heard from other projects where they needed to transfer TBs of non-sensitive data to other locations. I didn't know about the ERA submission until it was already in flight- I would have asked for it to be done as a team award, since other people did the parts that were tough. | Pattern-of-Life Activity Recognition in Seismic DataA collaborator in one of my research projects included me on a clustering paper that he had accepted in Applied Artificial Intelligence. Erick was interested in developing new algorithms that could help characterize different activities taking place in seismic data. As a data engineer in the project, I gathered data and did a lot of tedious, manual inspection to extract ground truth the team could use to train their algorithms. You can tell I had a hand in making labels, given the descriptive category "workers unroll white thing".  AbstractPattern-of-life analysis models the observable activities associated with a particular entity or location over time. Automatically finding and separating these activities from noise and other background activity presents a technical challenge for a variety of data types and sources. This paper investigates a framework for finding and separating a variety of vehicle activities recorded using seismic sensors situated around a construction site. Our approach breaks the seismic waveform into segments, preprocesses them, and extracts features from each. We then apply feature scaling and dimensionality reduction algorithms before clustering and visualizing the data. Results suggest that the approach effectively separates the use of certain vehicle types and reveals interesting distributions in the data. Our reliance on unsupervised machine learning algorithms suggests that the approach can generalize to other data sources and monitoring contexts. We conclude by discussing limitations and future work. Publication

|