

Craig Ulmer

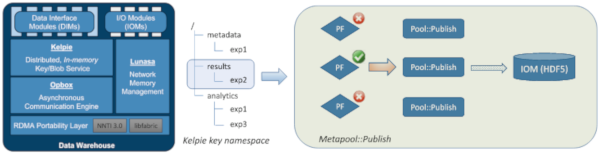

Mediating Data Center Storage Diversity

Patrick Widener put together a paper on using FAODEL to deal with data center storage diversity for ISC 2019. This paper gets into some of the ideas we've had about how to use data services to route data to different storage targets, and highlights some of the HDF5/LevelDB interfacing that Patrick has done.

Abstract

Composition of computational science applications into both ad hoc pipelines for analysis of collected or generated data and into well-defined and repeatable workflows is becoming increasingly popular. Meanwhile, dedicated high performance computing storage environments are rapidly becoming more diverse, with both significant amounts of non-volatile memory storage and mature parallel file systems available. At the same time, computational science codes are being coupled to data analysis tools which are not filesystem-oriented. In this paper, we describe how the FAODEL data management service can expose different available data storage options and mediate among them in both application- and FAODEL-directed ways. These capabilities allow applications to exploit their knowledge of the different types of data they may exchange during a workflow execution, and also provide FAODEL with mechanisms to proactively tune data storage behavior when appropriate. We describe the implementation of these capabilities in FAODEL and how they are used by applications, and present preliminary performance results demonstrating the potential benefits of our approach.

Publications

Presentations
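To illustrate what "application- and FAODEL-directed" placement can mean in practice, here is a hypothetical Python sketch: an explicit application hint picks the storage target, and otherwise a service-side policy decides based on object size and free NVM capacity. The target names, the 1 MiB cutoff, and the `choose_target` function are all invented for illustration; this is not FAODEL's API or the policy described in the paper.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical storage targets, loosely following the abstract:
# in-memory, non-volatile memory (NVM), and a parallel file system (PFS).
TARGETS = ("memory", "nvm", "pfs")
SMALL_OBJECT_BYTES = 1 << 20  # hypothetical 1 MiB cutoff for "keep in memory"


@dataclass
class Placement:
    target: str
    reason: str


def choose_target(size_bytes: int,
                  app_hint: Optional[str] = None,
                  nvm_free_bytes: int = 0) -> Placement:
    """Pick a storage target for one object (illustrative policy only)."""
    # Application-directed: a valid explicit hint always wins.
    if app_hint is not None:
        if app_hint not in TARGETS:
            raise ValueError(f"unknown storage target: {app_hint!r}")
        return Placement(app_hint, "application hint")
    # Service-directed: fall back to a simple size/capacity policy.
    if size_bytes <= SMALL_OBJECT_BYTES:
        return Placement("memory", "small object")
    if size_bytes <= nvm_free_bytes:
        return Placement("nvm", "fits in free NVM")
    return Placement("pfs", "too large for free NVM")
```

For example, `choose_target(64 << 20, nvm_free_bytes=1 << 30)` places a 64 MiB object on NVM, while the same call with no free NVM spills it to the parallel file system. Keeping the hint check first is what lets an application that knows its data override the service's default.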

Revisiting RoCE on 100GigE

A few years ago we stood up the Carnac cluster so our Emulytics users would have a place to do large-scale virtual network experiments. Unlike our HPC clusters, which use InfiniBand or OmniPath, Carnac was built around a 100GigE network fabric based on Mellanox NICs and a large Arista switch. While the 100GigE network was much more expensive than InfiniBand, it provides a more natural conduit for Emulytics experiments. Since we knew portions of Carnac would sit idle between jobs, we wondered if we could borrow some of the nodes from time to time and run MPI jobs efficiently. As expected, our initial tests using MPI over TCP were abysmal. TCP's latencies were pretty bad, and we found you really had to open up a lot of simultaneous connections to get anywhere near the available bandwidth.

About a decade ago there was a lot of interest in using RDMA over Converged Ethernet (RoCE) to improve this performance. RoCE is interesting because it tries to get Ethernet hardware to behave more like HPC hardware. On the host side, RoCE provides an InfiniBand API that HPC comm libs are already used to. RoCE NIC vendors have written OS-bypass libs that allow userspace applications to talk directly with message queues on the NIC. In the fabric, RoCE messages are marked in special Ethernet frames so RoCE-aware switches can handle them more efficiently (i.e., use link-level flow control to avoid drops).

RoCE has been out there for a while, but you don't see many people using it these days. Joe Kenny set about trying to configure our switches and NICs to use it. At first it seemed to be working, but in longer experiments he saw enough lockups to indicate that there were incompatibilities in the hardware. He pleaded for help in an OFED talk but found no solutions. He borrowed a switch from Mellanox but got nowhere. Just as we were about to call it dead, he stumbled onto some settings that fixed things. Things also worked fine back on our core Arista switch. It's frustrating that we don't have a good explanation for why things did or didn't work, but I pushed Joe to document what we went through in a SAND Report: SAND2019-13444.

Abstract

Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) has the potential to provide performance that rivals traditional high performance fabrics. If this potential proves out, significant impacts on system procurement decisions could follow. This work provides a series of small scale performance results which are used to compare and contrast the performance of RoCE-enabled Ethernet with TCP-based Ethernet and an HPC network. Additionally, a discussion of the maturity of RoCE firmware/software stacks and documentation is provided along with useful approaches for probing performance. A detailed description of two experimental setups known to have good RoCE performance is given, including step-by-step configuration and the exact hardware and software revisions employed. At small scales, RoCE is found to have significant performance advantages over "out-of-the-box" TCP protocols and is competitive with state-of-the-art high performance networks. Further examination of RoCE using a wider array of benchmarks and at greater scale is warranted.

Publication

100GigE Packet Capture

This summer we were fortunate to have two undergraduate summer interns come in to help us out with different projects related to the clusters. The first intern to arrive was Haoda Wang from USC. Haoda had a good bit of experience with Linux systems, so we had him help us do some experiments with some nodes we had just bought for 100Gb/s packet recording. The nodes each have two AMD Epyc processors, 1TB of RAM, 10x2TB of U.2 NVMe storage, and a 100Gb/s Mellanox VPI NIC. He did a nice write-up of his work in SAND2019-10319.

The Epyc nodes had a few new features, so the first thing we had Haoda do after setting up the hardware was run some benchmarks to get a better idea of how the system should be configured. He tried a few different OSs and hardware configs. One interesting observation was that the system was slightly faster when only half the memory slots were filled (AMD docs had warnings about this). Newer Ubuntu kernels had slightly better performance in some benchmarks, but we were stuck with RHEL due to driver issues with Mellanox. The U.2 storage performed very well: Haoda found that we could get close to 20GB/s of streaming write performance by using Btrfs or XFS RAIDs. Interestingly, ZFS didn't perform very well, possibly due to its complexity and the speed of the drives. Haoda also explored using SPDK to stream data to disk via kernel bypass, but given that a worst-case 100Gb/s network capture only needs about 12.5GB/s (100Gb/s ÷ 8 bits per byte), we decided to stick with plain I/O.

Haoda spent the rest of the summer fighting Mellanox drivers and tweaking settings to get the Ethernet NICs to run at 100Gb/s. After a great deal of searching, he realized that while the userspace DPDK library could grab packets from the wire correctly, running the data through libpcap caused multiple memory copies in order to format the data for output. Writing the raw packets to disk and then reading them in a follower application made it possible to keep up with line rates. It was a frustrating journey, but I'm glad we figured it out.

Publication

FAODEL 1.1906.1 Released

One of the things that's been missing from FAODEL is a tool to help manage resources and launch services. After the EMPIRE release, we did a lot of work to fix this by building a new CLI tool that does many different things. The faodel tool can start/stop services, set/remove DirMan resource info, and put/get Kelpie objects from resource pools. We've received approval from DOE to release this as version 1.1906.1 (Excelsior!) at https://github.com/faodel/faodel. Here's the changelog:

Release Improvements

Significant User-Visible Changes:

Known Issues

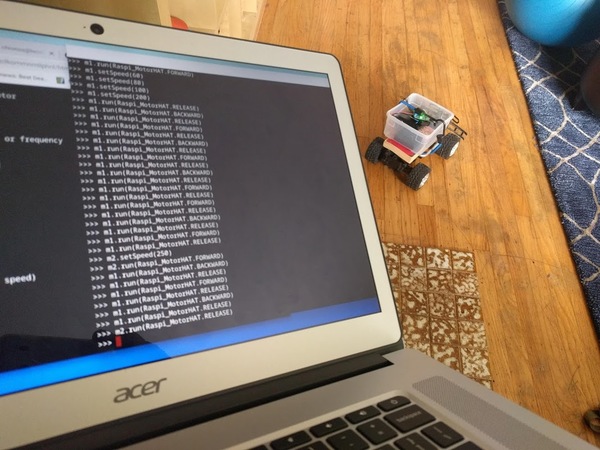

Rolling Plague

Several years ago I bought a broken remote-control truck toy for a buck at a school fundraiser. I knew I wouldn't get the RF part working again, but the chassis had wheels and motors that looked like they were in good condition. I took the thing apart and verified that all I had to do was power the motor in the back to get the rear wheels spinning, and apply positive or negative voltages to the front motor to turn the front wheels in either direction. I bought some L293D chips so I could flip directions on the motors, and had plans to hook it up to an Intel Edison. The project fizzled out, though, because the Edison didn't have very good support and it was a pain to connect your own parts to it.

A few weeks ago I got interested in I/O with the Raspberry Pi and decided I should revisit the RC car project. Rather than breadboard it up, I decided to just buy a Motor HAT board for the car and be done with it. This morning I finally got the whole thing together. I'm calling it Rolling Plague, since I wound up using a copy of Camus's The Plague to lift the controller off the wheels. Plus, everything is absurd.

Parts List

It took a while to pick out the right parts for this project. The main things were:

Hooking Things Up

The biggest pain in this project was soldering the header pins onto the Pi Zero board. I've never been great at soldering, and the pitch is small enough that I had to get a magnifying glass out given my old eyes. I continuously felt like I was ruining the board. However, the board booted when I applied power, and the power/ground pins at least seemed to work OK. For this project all I really needed was for the I2C pins to work, so I didn't test all the GPIO pins. I'm pretty sure this will be the last time I ever solder headers, though. If the WH version had been available, I would have gone with that.

I booted the Pi with the stock Raspbian Stretch Lite image and used a TV/keyboard to set up the OS. I used raspi-config to set the keyboard to US, connect to my wireless router, enable sshd, and enable the I2C drivers. The motor HAT's sample code needed smbus to run (I may have pip installed it, but you can apt-get python3-smbus). Once ssh was running, I detached the Pi from the TV and switched to using a Chromebook to connect to it via ssh. The Zero was sluggish at times, but its Python was good enough to issue I/O commands.

The wiring for the board was pretty easy. I plugged the 5V battery lines into the HAT and then ran the DC motor lines into M1 and M2 on the board. I also hooked up a servo and tested it out with the example programs. Their library did all of the work of setting up the controller over I2C. All I needed to do was issue some Python commands on the Pi to get it to turn left and right. I did the same thing with the DC motors. Basically, you set the speed of a DC motor (0-255) and then send a direction command to tell it which way to spin (forward, reverse, or disengage). The chipset sends a PWM signal that throttles how much voltage the motor sees (averaged over time). It was pretty thrilling to see the back motors spin up and go. They needed a value of 30 or so to get going.
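To make the "averaged over time" point concrete, here is a minimal Python sketch of an idealized PWM model: the 0-255 speed value sets a duty cycle, and the motor sees roughly duty cycle × supply voltage on average. It assumes the speed value maps linearly onto the duty cycle, assumes a 5V motor supply (the battery pack mentioned above), and ignores losses in the driver chip; `average_motor_voltage` is a hypothetical helper, not part of the HAT's library.

```python
def average_motor_voltage(speed: int, supply_volts: float,
                          max_speed: int = 255) -> float:
    """Time-averaged voltage an ideal PWM driver presents to a DC motor.

    Assumes the 0..max_speed setting maps linearly onto the PWM duty cycle
    and ignores losses in the driver chip.
    """
    if not 0 <= speed <= max_speed:
        raise ValueError(f"speed must be in 0..{max_speed}, got {speed}")
    duty_cycle = speed / max_speed  # fraction of each PWM period the output is on
    return duty_cycle * supply_volts


# Settings mentioned in the post, with an assumed 5V motor supply.
for setting in (30, 40, 255):
    print(setting, round(average_motor_voltage(setting, 5.0), 2))
```

Under this model, the setting of 30 that got the back motors moving is a duty cycle of about 12%, or roughly 0.6V on average from a 5V supply.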
Back and Forth

I didn't want the electronics to get smashed, so I put them in a small plastic bin that I could strap to the chassis. The bin didn't quite clear the wheels, so I grabbed a paperback copy of The Plague and stuck it between the chassis and the bin. The whole thing is strapped together with a shock cord. I set the back motor to the minimum power setting and told it to go forward. It immediately went backwards (I hadn't bothered to figure out polarity) and ran into something. I flipped the wires around and issued a few commands to go forwards and backwards. The extra weight of the batteries (and Camus) meant I had to provide a higher value (around 40) to the motors to get the car going. Similarly, the front wheels didn't turn very sharply, even when using the top value (255). I'll probably need to increase the battery voltage to get it to work a little better.

Driving the car was pretty clunky, largely because I had to issue multiple commands to get each motor to change state (i.e., set the speed and then enable/disable the motor). My kids started driving their RC cars around me, and there was no hope of me keeping up. I'll need to come back later and write some functions to simplify the driving.

Naming

In retrospect, Camus's book seems like an appropriate choice for this project. It's pretty ridiculous to put all this effort (and money) into building an RC car that's nowhere near as usable as a $10 car from a toy store. Still, it's important to keep doing the things you do, no matter how absurd they are.
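One way to simplify the driving is a small helper that folds "set the speed, then send a direction" into a single call per state change. The sketch below is hypothetical: the `set_speed`/`run` motor interface, the `Direction` enum, and the `FakeMotor` stand-in are placeholders rather than the HAT library's actual API, so a real version would forward these calls to whatever the vendor library provides. The `min_start` default of 40 comes from the value the loaded car needed to get moving.

```python
from enum import Enum
from typing import List, Tuple


class Direction(Enum):
    FORWARD = "forward"
    REVERSE = "reverse"
    RELEASE = "release"  # "disengage": stop driving the motor


class FakeMotor:
    """Hypothetical stand-in for one HAT motor channel; records commands."""

    def __init__(self) -> None:
        self.commands: List[Tuple[str, object]] = []

    def set_speed(self, speed: int) -> None:
        self.commands.append(("speed", speed))

    def run(self, direction: Direction) -> None:
        self.commands.append(("run", direction))


def drive(motor, throttle: int, min_start: int = 40) -> None:
    """Change a motor's state with one call.

    The sign of throttle picks the direction and its magnitude the speed.
    Nonzero throttles are clamped to [min_start, 255] so a small request
    still gets the loaded car moving; zero disengages the motor.
    """
    if throttle == 0:
        motor.run(Direction.RELEASE)
        return
    speed = min(255, max(min_start, abs(throttle)))
    motor.set_speed(speed)
    motor.run(Direction.FORWARD if throttle > 0 else Direction.REVERSE)


back = FakeMotor()
drive(back, 100)  # forward at speed 100
drive(back, -10)  # reverse, raised to the minimum starting speed
drive(back, 0)    # disengage
print(back.commands)
```

The same `drive` call could steer the front motor too, with the sign choosing left or right once the wiring polarity is known.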