Craig Ulmer

Carnac in the HPC Annual Report

This year we stood up the Carnac cluster to serve as an institutional platform for hosting large-scale Emulytics experiments. Carnac has 288 compute nodes connected by a 100GigE Arista core switch. The system is unusual in that it features a reservation system that lets users schedule bare-metal jobs (e.g., you can request that 100 bare-metal nodes be allocated next week for two days and provisioned with a customized OS). We were asked to put the following blurb together for the Sandia HPC Annual Report.

News Article

FAODEL 1.1811.1 Released

Now that the EMPIRE review is done, we've packaged up all our changes and made a new release of the software (DIO). We received permission from both Sandia and DOE to make these updates available to the rest of the world. You can see this branch at https://github.com/faodel/faodel. Here's the changelog:

Release Improvements

Significant User Visible Changes:

Known Issues

Update: While getting FAODEL integrated into Spack, we pushed out a few minor bug fixes in a 1.1811.2 release.

EMPIRE I/O Evaluation

This year in my I/O project we've been part of an ASC Level 2 milestone to evaluate the readiness of the EMPIRE simulation code that's being developed at Sandia. One mode of EMPIRE uses particle-in-cell methods to simulate plasma environments under different conditions. In a nutshell, the simulation tracks hundreds of millions of particles as they move through a mesh in order to observe their electromagnetic effects. My part of this work has focused on making sure I/O is performant and that the simulator can checkpoint data (particles and mesh variables) without killing simulation performance. Since existing I/O libraries were already used for field data, the bulk of this work has focused on using FAODEL to route raw, intermediate state to disk and to reload it.

After a considerable amount of work, we added hooks so EMPIRE could write particle/field data out through FAODEL's API. Our code packed data into Lunasa data objects and then published the objects to a pool. For simplicity we used local pools that wrote to disk, but in later tests we also wrote to distributed hash tables. We ran a large number of tests on both the KNL and Haswell partitions of our Cray XC40 platform and compared writes to both Lustre and the DataWarp Burst Buffer. FAODEL provided I/O speedups on both types of storage because it helped streamline our I/O. Interestingly, we noticed that all I/O suffered on the KNL processors due to the poor serial performance of these CPUs compared to Haswell.

While the milestone was a considerable amount of work for me, it was a good experience because it gave me a chance to see how complex codes grow over time. It was hard to deal with all the changes, but once we inserted an API beachhead for our code it was easier to make progress.
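The pack-then-publish flow described above can be illustrated with a small, self-contained sketch. This is not FAODEL's API: `pack_particles`, `unpack_particles`, `LocalDiskPool`, and the `step0042/rank0/particles` key are hypothetical stand-ins for Lunasa data objects and a local pool that writes to disk. The sketch assumes each particle carries a 3D position and velocity stored as doubles.

```python
import os
import struct
import tempfile

# Hypothetical illustration of a pack-then-publish checkpoint flow.
# NOT FAODEL's API: every name here is an invented stand-in.

HEADER = struct.Struct("<4sIQ")  # magic tag, format version, particle count


def pack_particles(positions, velocities):
    """Pack (x, y, z) positions and velocities into one contiguous object."""
    n = len(positions)
    if len(velocities) != n:
        raise ValueError("positions and velocities must have the same length")
    if any(len(t) != 3 for t in (*positions, *velocities)):
        raise ValueError("each position and velocity needs 3 components")
    flat = [c for p in positions for c in p] + [c for v in velocities for c in v]
    return HEADER.pack(b"PART", 1, n) + struct.pack(f"<{len(flat)}d", *flat)


def unpack_particles(blob):
    """Inverse of pack_particles: returns (positions, velocities)."""
    magic, version, n = HEADER.unpack_from(blob, 0)
    if magic != b"PART" or version != 1:
        raise ValueError("unrecognized object")
    vals = struct.unpack_from(f"<{6 * n}d", blob, HEADER.size)
    pos = [tuple(vals[3 * i:3 * i + 3]) for i in range(n)]
    vel = [tuple(vals[3 * (n + i):3 * (n + i) + 3]) for i in range(n)]
    return pos, vel


class LocalDiskPool:
    """Toy key/blob pool: each published object becomes one file on disk."""

    def __init__(self, root):
        self.root = root
        os.makedirs(root, exist_ok=True)

    def _path(self, key):
        # Flatten hierarchical keys into a single file name.
        return os.path.join(self.root, key.replace("/", "_") + ".bin")

    def publish(self, key, blob):
        with open(self._path(key), "wb") as f:
            f.write(blob)

    def fetch(self, key):
        with open(self._path(key), "rb") as f:
            return f.read()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        pool = LocalDiskPool(tmp)
        blob = pack_particles([(0.0, 1.0, 2.0)], [(3.0, 4.0, 5.0)])
        pool.publish("step0042/rank0/particles", blob)
        print(unpack_particles(pool.fetch("step0042/rank0/particles")))
```

Packing a rank's particles into one object with a small header turns a checkpoint into a few large writes instead of many small ones, and makes each object self-describing when it is reloaded. That is one plausible reason this style of I/O helps; it is not a description of FAODEL's internals.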
It was thrilling to see our code actually work and do the things it was supposed to do, and we received positive feedback from the review committee for targeting the real hardware. I'll spend a good bit of next year revamping some of our code and pushing it so we can do more analysis operations.

Publications

FAODEL Overview Paper

Patrick, Scott, and Jay put together an overview paper about what we're doing in FAODEL. It was presented at SIGARCH/SIGHPC's ScienceCloud18 workshop.

Abstract

Composition of computational science applications, whether into ad hoc pipelines for analysis of simulation data or into well-defined and repeatable workflows, is becoming commonplace. In order to scale well as projected system and data sizes increase, developers will have to address a number of looming challenges. Increased contention for parallel filesystem bandwidth, accommodating in situ and ex situ processing, and the advent of decentralized programming models will all complicate application composition for next-generation systems. In this paper, we introduce a set of data services, Faodel, which provide scalable data management for workflows and composed applications. Faodel allows workflow components to directly and efficiently exchange data in semantically appropriate forms, rather than those dictated by the storage hierarchy or programming model in use. We describe the architecture of Faodel and present preliminary performance results demonstrating its potential for scalability in workflow scenarios.

Publications

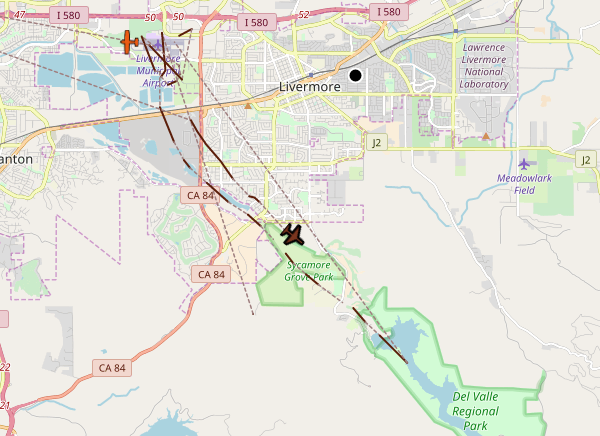

Blimp Tracking Success

A little over a week ago, a friend of mine who knows I track planes called to tell me he'd seen the Goodyear Blimp flying over Livermore. When I got home I checked my Pi-aware flight tracker to see if I could spot anything. Nope, nothing was on the current map, and my logs didn't have any hits for the ICAO numbers Goodyear has registered with the FAA (their blimps have tail numbers N1A to N7A). I was disappointed but not too surprised: the one time I did see a blimp on the tracker, it wasn't providing position info. I figured the one my friend saw had already landed, and that my logs weren't catching it because I only record planes with positions.

I had the following Friday off, so I took some time to poke around a little more. I found that someone had posted a video on YouTube showing blimp N2A landing in Livermore earlier in the week. That helped me figure out which ICAO ID to look for (N2A is A18D51). Amy called to let me know that she'd spotted it while she was driving to Dublin. I checked the tracker again and got a nice surprise: in addition to picking up its transmissions, there were enough Pi-aware users in the area to determine its location via MLAT. So far the tracker has always reported the blimp's altitude as "on ground," while FlightAware says it's somewhere between 1000 and 2000 feet. I've read that blimps are hard to track because their low altitude makes it difficult to get enough stations with line of sight to do MLAT. I don't get much range on it; it disappears once it's out around Dublin.

I don't have a good idea of what it's doing out here. Usually the blimp comes out for sporting events. The Warriors/Cavs championship games started this week, but they're in an enclosed coliseum. I guess the Giants and A's also have some home games this week. It's definitely been hanging around Livermore a lot, though.
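The N2A-to-A18D51 mapping isn't arbitrary: US Mode S addresses are handed to N-numbers in a fixed order, commonly documented as starting at A00001 for N1 and ending at ADF7C7 for N99999. Below is a minimal sketch of that ordering, assuming the usual registration rules (at most five characters after the N, the first a nonzero digit, at most two trailing letters, and no I or O). The function names are hypothetical, and this is an illustration rather than the lookup actually used above.

```python
# Hypothetical sketch: map a US "N-number" to its 24-bit ICAO (Mode S) address,
# assuming the commonly documented FAA ordering N1 -> A00001 ... N99999 -> ADF7C7.

LETTERS = "ABCDEFGHJKLMNPQRSTUVWXYZ"  # 24 letters: I and O are never used
DIGITS = "0123456789"

# A "suffix block" is a prefix on its own plus all of its 1- and 2-letter suffixes.
SUFFIX_SIZE = 1 + len(LETTERS) * (1 + len(LETTERS))  # 601 entries
BUCKET4 = 1 + len(LETTERS) + len(DIGITS)             # N1234, N1234A-Z, N12340-9
BUCKET3 = SUFFIX_SIZE + len(DIGITS) * BUCKET4        # everything under e.g. N123
BUCKET2 = SUFFIX_SIZE + len(DIGITS) * BUCKET3        # everything under e.g. N12
BUCKET1 = SUFFIX_SIZE + len(DIGITS) * BUCKET2        # everything under e.g. N1
FIRST_ADDRESS = 0xA00001                             # address of N1


def suffix_offset(letters):
    """Offset of a 0-2 letter suffix within a suffix block ('' -> 0, 'A' -> 1, 'AA' -> 2)."""
    if not letters:
        return 0
    offset = LETTERS.index(letters[0]) * (1 + len(LETTERS)) + 1
    if len(letters) == 2:
        offset += LETTERS.index(letters[1]) + 1
    return offset


def n_number_to_icao(tail):
    """Return the six-hex-digit ICAO address for a US registration such as 'N2A'."""
    tail = tail.upper()
    body = tail[1:]
    if not tail.startswith("N") or not 1 <= len(body) <= 5 or body[0] not in "123456789":
        raise ValueError(f"not a valid N-number: {tail}")
    offset = (int(body[0]) - 1) * BUCKET1  # skip earlier leading digits
    digit_buckets = (BUCKET2, BUCKET3, BUCKET4)
    for i in range(1, len(body)):
        ch = body[i]
        if ch in LETTERS:
            rest = body[i:]
            if len(rest) > 2 or any(c not in LETTERS for c in rest):
                raise ValueError(f"bad letter suffix in {tail}")
            if i == 4:
                offset += LETTERS.index(ch) + 1  # fifth position: one letter only
            else:
                offset += suffix_offset(rest)
            break
        if ch not in DIGITS:
            raise ValueError(f"unexpected character {ch!r} in {tail}")
        if i == 4:
            offset += 1 + len(LETTERS) + int(ch)  # skip the entry and its letters
        else:
            offset += SUFFIX_SIZE + int(ch) * digit_buckets[i - 1]
    return f"{FIRST_ADDRESS + offset:06X}"


if __name__ == "__main__":
    print(n_number_to_icao("N2A"))  # A18D51
```

Under these assumptions the whole range works out to exactly A00001 through ADF7C7, which is a useful sanity check on the block sizes.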
On the way home from lunch today, we stopped at the Livermore airport and watched it land, swap out people, and take off again. From the tracks I captured, it looks like they made a few trips out to Lake Del Valle and back. It was 90 degrees today, so there were probably a lot of people out there cooling off. It's funny having a giant blimp hang out in our little town for so long. It's like a huge puppy wandering all over the place.
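The MLAT fix mentioned in this post works by comparing when the same transmission reaches different receivers: each pair of arrival times constrains the aircraft to a hyperbola, and intersecting several of them pins down a position. The sketch below is a simplified 2D version under loudly stated assumptions (flat geometry, perfectly synchronized receivers, noise-free timing, and time differences already converted to range differences). It is not FlightAware's implementation, and `solve_tdoa` and the station layout are hypothetical. It also shows why station count matters: two receivers give one range difference for two unknowns, and the solver refuses. A real 3D fix generally needs at least four receivers, which is harder to get for a low-flying blimp.

```python
import math

# Hypothetical 2D TDOA multilateration sketch. Assumptions (not from the post):
# flat geometry, synchronized receivers, noise-free timing, and arrival-time
# differences already converted to range differences.


def solve_tdoa(stations, range_diffs, guess, iterations=20):
    """Gauss-Newton fit of an emitter position to measured range differences.

    stations[0] is the reference receiver; range_diffs[i] is the measured
    |p - stations[i + 1]| - |p - stations[0]|.
    """
    x, y = guess
    x0, y0 = stations[0]
    for _ in range(iterations):
        d0 = math.hypot(x - x0, y - y0)
        # Accumulate the 2x2 normal equations (J^T J) step = -J^T r.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (sx, sy), measured in zip(stations[1:], range_diffs):
            di = math.hypot(x - sx, y - sy)
            r = (di - d0) - measured               # residual for this pair
            jx = (x - sx) / di - (x - x0) / d0     # dr/dx
            jy = (y - sy) / di - (y - y0) / d0     # dr/dy
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 += jx * r
            b2 += jy * r
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            # Too few receivers (or a degenerate layout) to fix a 2D position.
            raise ValueError("not enough independent range differences")
        # Solve the 2x2 system with Cramer's rule.
        dx = (-b1 * a22 + b2 * a12) / det
        dy = (-b2 * a11 + b1 * a12) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < 1e-12:
            break
    return x, y


if __name__ == "__main__":
    # Four hypothetical receivers on a 10 km square and a known test emitter.
    stations = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    true_pos = (3.0, 4.0)
    ref = math.dist(true_pos, stations[0])
    diffs = [math.dist(true_pos, s) - ref for s in stations[1:]]
    print(solve_tdoa(stations, diffs, guess=(5.0, 5.0)))
```

Starting from the centroid of the receivers, the estimate should land very close to the test emitter at (3, 4) within a few iterations. With real, noisy timing the same least-squares setup gives a best fit rather than an exact hit.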