As a follow-on to our first MCM paper, we built a tool called IMPACT to make it easier for chip designers to explore different packaging options early in the design process. We were invited to expand our conference paper into an article for the IEEE Transactions on Components, Packaging, and Manufacturing Technology journal.

Abstract

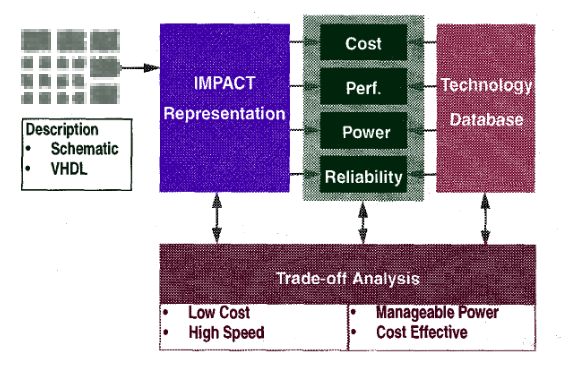

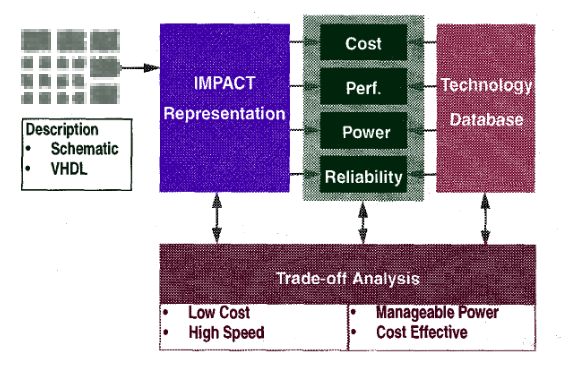

This paper explores early analysis of the complex relationships between system architectures and the active and packaging materials from which they are implemented. The goals of this analysis are to enable the designer to specify cost effective technologies for a particular system, and to uncover resources which may be exploited to increase performance of such a system, early in the design process. We describe a prototype tool called IMPACT, which will predict cost, performance, power, and reliability, and present several case studies demonstrating its use.

Publications

- ICISS Paper Vivek Garg, Darrell Stogner, Craig Ulmer, D. Scott Wills, and Sudhakar Yalamanchili, "Early Analysis of Cost/Performance Trade-Offs in MCM Systems", IEEE International Conference on Innovative Systems in Silicon, 1996.

- Journal Paper Vivek Garg, Darrell Stogner, Craig Ulmer, David E. Schimmel, Chryssa Dislis, Sudhakar Yalamanchili, D. Scott Wills, "Early Analysis of Cost/Performance Trade-Offs in MCM Systems", IEEE Transactions on Components, Packaging, and Manufacturing Technology: Part B, Vol. 20, Iss. 3, Aug 1997.

During my master's degree I did predictive modeling and simulation for Georgia Tech's Packaging Research Center (PRC). For this work we combined several EE models for circuits and packages to estimate when it would make sense to use multi-chip module (MCM) packaging instead of building large monolithic dies. In this paper we made the case for considering packaging choices early in the design process so that designers could make better choices.
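To give a feel for the kind of trade-off involved, here is a minimal sketch that compares one large die against the same area split across several smaller dies in an MCM. It is not the model from the paper: it assumes a textbook Poisson yield model, known-good dies, a perfect assembly step, and a flat packaging overhead, and every function name and number in it is hypothetical.

```python
import math


def poisson_yield(area_cm2, defects_per_cm2):
    """Poisson yield model: probability that a die of this area has zero defects."""
    return math.exp(-area_cm2 * defects_per_cm2)


def cost_per_good_die(area_cm2, defects_per_cm2, cost_per_cm2):
    """Silicon cost of one working die, amortizing the dies lost to defects."""
    raw_cost = area_cm2 * cost_per_cm2
    return raw_cost / poisson_yield(area_cm2, defects_per_cm2)


def monolithic_cost(total_area_cm2, defects_per_cm2, cost_per_cm2):
    """Cost of putting all of the logic on a single large die."""
    return cost_per_good_die(total_area_cm2, defects_per_cm2, cost_per_cm2)


def mcm_cost(total_area_cm2, n_chips, defects_per_cm2, cost_per_cm2, package_overhead):
    """Cost of n_chips equal dies plus a flat (hypothetical) MCM packaging overhead.

    Assumes each die is tested before assembly and that assembly never fails.
    """
    per_die = cost_per_good_die(total_area_cm2 / n_chips, defects_per_cm2, cost_per_cm2)
    return n_chips * per_die + package_overhead


if __name__ == "__main__":
    # Made-up parameters: 1 defect/cm^2, 10 cost units per cm^2, 50 units of packaging.
    for area in (0.5, 4.0):
        mono = monolithic_cost(area, 1.0, 10.0)
        mcm = mcm_cost(area, 4, 1.0, 10.0, 50.0)
        print(f"{area} cm^2: monolithic={mono:.1f}, 4-chip MCM={mcm:.1f}")
```

With these made-up numbers the small design is cheaper as a single die, while the large design is cheaper as an MCM because the yield of the big die collapses. A real analysis would also have to account for performance, power, and reliability, which this sketch ignores.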

Abstract

Computer system design addresses the optimization of metrics such as cost, performance, power, and reliability in the presence of physical constraints. The advent of large area, low cost Multi-Chip Modules (MCM) will lead to a new class of optimal system designs. This paper explores the early analysis of the impact of packaging technology on this design process. Our goal is to develop a suite of tools to evaluate computing system architectures under the constraints of various technologies. The design of the memory hierarchy in high speed microprocessors is used to explore the nature and type of trade-offs that can be made during the conceptual design of computing systems.

Publications

- EDTC Paper Vivek Garg, Steve Lacy, David Schimmel, Darrell Stogner, Craig Ulmer, D. Scott Wills, and Sudhakar Yalamanchili, "Incorporating Multi-Chip Module Packaging Constraints into System Design", European Design and Test Conference, 1996.

In early 1996 the Wall Street Journal did a piece on a class project that I'd worked on with four other students. They didn't name us, but it was pretty fun to be 23 and talking to a journalist from the WSJ.

The project was for a senior-level Real-Time DSP class I took in the Fall. My project team worked on modernizing a system that GTRI had developed to detect a person's heartbeat and respiration rate using a radar device. It was a fun project that highlighted how signal processing is often an art as much as it is a science.

An Idea for the Olympics

I worked for Dr. Schafer and Dr. McClellan as an undergrad and developed a few Matlab programs they could use in lab exercises for the intro to digital signal processing (DSP) class. One day after meeting with Dr. Schafer he told me that there was a unique opportunity coming up that I could be a part of if I signed up for a senior-level DSP class that was being offered in the Fall. The 1996 Summer Olympics in Atlanta were only a half year away, and the whole city was buzzing with Olympic thoughts. One of the DSP professors saw a news story about how world-class archers are so focused on stability when they're aiming that they time the release of an arrow with their heartbeat so that their aim isn't disturbed by the heart's movements. The DSP professors thought it would be interesting to see if we could rig up a device that could monitor an archer's heartbeat to test out the story, and do so without attaching anything to the archer that would disturb him or her.

As it turned out, someone at GTRI had previously built an analog system for detecting human vital signs as part of a military contract. The idea was that the device would be attached to a military truck so that soldiers could drive around a battlefield, point the device at bodies in the field, and use it to detect which people were alive and could be rescued. The GTRI device was completely analog and used a radar device to provide the input signal to an analog filter that looked for a heartbeat signature in the frequency domain. The GTRI people told us the system had been successful enough to fulfill the contract, but hadn't made it out of the prototype stage for a number of reasons (size, analog reliability, and latency). They were happy to let us use the radar section of the project though, provided we worked with them if anything came out of the project.

Class Project

I signed up for the real-time DSP class and met four other students that were picked to work on the vital signs project. The class covered a number of practical issues in building real-time systems using current (TI) DSP chips. The professor was a founder of a company that built DSP products for others, and loaned each project team an EISA DSP card and software that could be used to implement the projects. In addition to having good hardware (modern TI DSP, memory, ADC, and DAC), the board came with several software examples that demonstrated how to continuously move data from ADC to DSP to DAC. As someone who was used to waiting on Matlab, it was thrilling to watch the board do things in real time. Testing was also interesting. The way you debugged an FFT was by hooking up a frequency generator to the input and an oscilloscope to the output. Seeing the stem move around as you adjusted the frequency was pretty exciting.
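The frequency-generator test can be mimicked in software. The sketch below is not the TI code we used: it feeds a synthetic sine wave into a plain DFT (slower than an FFT, but it produces the same spectrum) and reports which frequency bin holds the peak. The 8 kHz sample rate and 64-sample frame are hypothetical values chosen so that the test tones land exactly on a bin.

```python
import cmath
import math


def tone(freq_hz, sample_rate_hz, n_samples):
    """Synthetic stand-in for the frequency generator: a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n_samples)]


def dft_magnitudes(samples):
    """Magnitude of DFT bins 0..N/2, computed directly from the definition."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        mags.append(abs(acc))
    return mags


def peak_bin(samples):
    """Index of the largest-magnitude bin, i.e. where the 'stem' shows up."""
    mags = dft_magnitudes(samples)
    return max(range(len(mags)), key=mags.__getitem__)


if __name__ == "__main__":
    sample_rate = 8000  # hypothetical
    frame = 64          # hypothetical; bin spacing is 8000 / 64 = 125 Hz
    for freq in (1000, 2000):
        print(freq, "Hz -> bin", peak_bin(tone(freq, sample_rate, frame)))
```

Turning the "knob" from 1000 Hz to 2000 Hz moves the peak from bin 8 to bin 16, which is the software equivalent of watching the stem slide across the oscilloscope.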

The project team started off by going over to GTRI and recording some initial radar data. We had one of our guys sit in a chair and breathe heavily for thirty seconds while the rest of us aimed a radar horn at him and collected data on a PC. The team split up into two teams. The analysis team worked through the signal processing and dug around the data in Matlab. The implementation team focused on practical concerns of getting algorithms running on the hardware. I worked on the implementation team, as the hardware was the greater unknown. While there was a lot of TI reference code we could use, it was all in assembly and took a good bit of tinkering to glue together correctly.

It was difficult for us to detect respiration (chest moving in and out) and a heartbeat because the physical movements were small (precision issues) and at a low frequency (latency issues). A big part of our work involved a massive amount of (filtered) downsampling to get to the frequencies we needed. It seemed pretty crazy to me that we were recursively gathering thousands of samples only to distill them down to a single value.
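The staged downsampling can be sketched as a cascade of small decimators. This is an illustration rather than our DSP code: it uses a simple block average as the low-pass filter at each stage, where a real design would likely use a better FIR filter, and the stage factors are hypothetical.

```python
def decimate(samples, factor):
    """One stage: average each block of `factor` samples and keep one output per block.

    The block average is a crude boxcar low-pass filter. Leftover samples that
    do not fill a whole block are dropped.
    """
    usable = len(samples) - len(samples) % factor
    return [sum(samples[i:i + factor]) / factor for i in range(0, usable, factor)]


def decimate_cascade(samples, factors):
    """Apply several decimation stages in sequence; the total rate drops by their product."""
    for factor in factors:
        samples = decimate(samples, factor)
    return samples


if __name__ == "__main__":
    # 1000 input samples reduced by 10 x 10 x 10 leave a single output value.
    raw = [1.0] * 1000
    print(decimate_cascade(raw, [10, 10, 10]))
```

Splitting the reduction into stages keeps each filter short, which matters on hardware that has to keep up with the ADC in real time.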

Mixed Results

The signal processing team struggled to find meaning in the data we captured, even after we went back and made additional runs. Respiration could be seen, but signals in the heartbeat frequencies were extremely faint. I believe the analysis team did have some better luck when they focused on harmonics, but none of us felt that our initial signal had enough fidelity for us to feel confident in the result. The implementation team got a design together that did all the downsampling and detection in real time. However, we were all pretty disappointed that we couldn't find a smoking gun.

Even though the results weren't what we wanted, the project was a good experience for me because we took an idea and built a practical implementation in a limited amount of time using the resources we had available. I enjoyed getting my hands dirty with real hardware, even though it meant wrestling with a lot of low-level software that wasn't in my scope. A few years after we did this work, someone else from Georgia Tech picked up the work and finished the effort. In retrospect it would have made a nice starting point for a master's thesis, but by the Olympics I was doing more in CompE than DSP at school.

For my undergraduate senior design project, Darrell Stogner and I designed and built a systolic processor array that used multiple processing elements to accelerate matrix and vector operations. In addition to simulating the design, we adapted an assembler to our ISA and built assembly code to demonstrate that it could process multiple types of data flows. While the design was too large to fit in the school's Zycad hardware emulator box, we were able to map, partition, and test portions of the design in FPGA hardware.

The architectures for (a) the overall system and (b) the individual cell

GaTech's New CompE 4500/4510 Senior Design Class

Midway through my undergraduate CompE degree, Georgia Tech did a complete overhaul of the CompE curriculum. While it would have been quicker to graduate under the old program, I chose to switch over to the new curriculum because the classes covered a broader range of material. One of the requirements in the new program was that all students had to take a two-quarter senior design class. The course catalog described this series as a "Capstone design experience for computer engineering majors. Design a processor and associated instruction. Testing via simulation models." I signed up for the first offering of CompE 4500, which was taught by Dr. Sudhakar Yalamanchili. The class only had about 15 students in it, since there weren't many CompEs at the tail end of the curriculum yet. Sudha was incredibly encouraging and told us that the point of this class was to design a new processor architecture and build all the support software necessary to bring it to life. He would teach us how to design hardware in VHDL, debug the design with EDA simulators, customize an assembler to work with our ISAs, and synthesize the hardware to run in an FPGA-based emulation platform from Zycad. Sudha asked the class to split into teams of two or three, and then scheduled weekly meetings with each team to discuss how projects were progressing. Darrell and I knew each other from previous DSP classes, and teamed up without any real ideas of what we should build for the class.

Systolic Processor Arrays

When we asked Sudha for project ideas, he sent us home with some research papers about 2D systolic processor arrays that people had built for image processing. While the papers were a little bit beyond our reading level, they helped us understand that researchers had constructed systolic processor arrays as a way to maximize concurrency in complex dataflows. The idea is that you design a simple processing element (PE) with fixed routing connections that make it easy to tile out the cores in a large grid. After loading a program into the PE, you stream data into and out of the edges of the array. Each PE does a little bit of work on the data as it is pumped through the system. Sensing our concern about getting a design up and running by the end of the course, Sudha suggested that we focus on a 1D design that could implement matrix multiplication and convolution. Darrell and I picked the name "elRoy" because it sounded like something from the space age. We picked funny caps to make it sound edgy.
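To make the dataflow concrete, here is a small cycle-by-cycle simulation of a 1D systolic array computing a convolution. It is not the elRoy design: it assumes one textbook arrangement in which each PE holds a fixed weight, partial sums advance one PE per cycle, and input samples advance one PE every two cycles. All names are hypothetical.

```python
def direct_convolution(weights, samples):
    """Reference result: full convolution computed the ordinary way."""
    out = [0] * (len(samples) + len(weights) - 1)
    for i, x in enumerate(samples):
        for j, w in enumerate(weights):
            out[i + j] += w * x
    return out


def systolic_convolution(weights, samples):
    """Simulate a weight-stationary 1D systolic array, one loop iteration per clock."""
    k = len(weights)
    x_regs = [[0, 0] for _ in range(k)]  # two-stage input delay inside each PE
    y_regs = [0] * k                     # partial-sum register at each PE output
    outputs = []
    # Run long enough to stream every sample in and flush the pipeline.
    for t in range(len(samples) + 2 * k - 2):
        x_in = samples[t] if t < len(samples) else 0
        new_x = [[0, 0] for _ in range(k)]
        new_y = [0] * k
        for j in range(k):
            # Each PE only sees values handed over by its left-hand neighbor.
            x_here = x_in if j == 0 else x_regs[j - 1][1]
            y_here = 0 if j == 0 else y_regs[j - 1]
            new_y[j] = y_here + weights[j] * x_here  # one multiply-add per PE
            new_x[j] = [x_here, x_regs[j][0]]        # shift the input delay line
        x_regs, y_regs = new_x, new_y
        outputs.append(y_regs[-1])
    # The first k - 1 cycles are pipeline fill before the first valid result.
    return outputs[k - 1:]


if __name__ == "__main__":
    print(systolic_convolution([1, 2, 3], [1, 1, 1]))
    print(direct_convolution([1, 2, 3], [1, 1, 1]))
```

Both calls print the same list. The point of the simulation is that no PE ever looks at anything except its own registers and its neighbor's outputs, which is what makes the cells easy to tile.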

For the PE part of the design we sketched out an architecture that included one multiplier, one adder, a few registers, and a data path that could be adjusted at run time by software. Realizing that multipliers were expensive and that our operators sometimes had zeros in them, we inserted a configurable-depth FIFO in the data flow to allow data to simply bubble through on coefficients that were zero. Next, we tiled several PEs together and created bus logic to route data and control signals into the array. Finally, we had to design a general-purpose processor that would allow us to use software to control the flow of data into and out of the array. The processor was a bad design (i.e., no pipelining, minimal ops), but it was good enough that we could run basic problems that we wrote in assembly. It was sheer joy the first time I compiled an assembly program, hard coded the program into a RAM simulation module, and saw the data bubbling through all the monitoring points in my simulation.

EDA Hassles

The original goal for the class was to design and simulate in the first quarter and then synthesize and place the design in an emulation box in the second quarter. Unfortunately, the class got hit by numerous EDA problems midway through. We were using Synopsys to synthesize the designs, but the license unexpectedly expired and didn't get renewed until later in the second quarter. Similarly, the place and route tools for the emulation box weren't fully baked, as nobody had really figured out how to handle designs that had to be sliced into multiple FPGAs at that time. We were skeptical about the tools up front, so we spent a lot of time minimizing the amount of work the synthesis tools would have to do. We basically converted our VHDL design into gate-level components as much as possible, leaving only the multiplier for synthesis. When the emulator box started working again, we discovered it just didn't have the capacity to store multiple PEs. Thus, we focused on doing piece-wise demonstrations where we could test out individual components (e.g., a multiplier) on the hardware.

Later CompE 4500 classes backed away from designing exotic architectures and instead focused on building traditional CPU designs the whole way through. My friends did a lot of hard things (e.g., superscalar, Booth's algorithm) in RTL and got their designs PAR'd on the emulator box. I'm happy though that my class was given some room to try out new ideas.

Reports

The following are the reports we wrote at the end of the first and final quarters:

- Q1 Progress Report: Darrell Stogner and Craig Ulmer, "elRoy: A Systolic Processor Array", Fall 1994 Report

- Q2 Final Report: Darrell Stogner and Craig Ulmer, "elRoy: A Systolic Processor Array", Winter 1995 Report


disclaimer

Views and opinions expressed in this website are my own and do not necessarily represent the views of my current or former employers, the US Department of Energy, or the United States Government.

Papers and slides presented in the publications portion of this website have gone through a review and approval process to ensure the work can be shared with the public. In the case of my most recent employer, an "Unclassified Unlimited Release" marking signifies that a derivative classifier and a knowledgeable manager have both reviewed the material and approved it for unlimited distribution.

The majority of these publications are available at the DOE Office of Scientific and Technical Information's public archive. Simply search for Craig Ulmer at OSTI.gov.