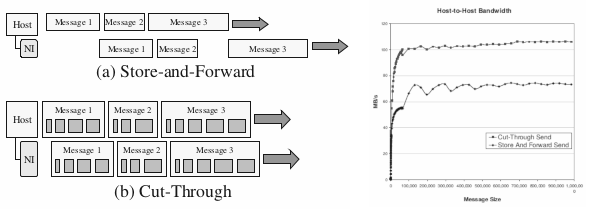

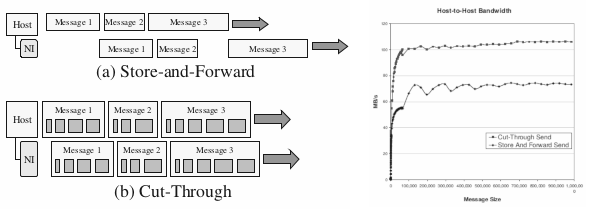

This paper I wrote for the first Myrinet User Group meeting provides an example of how GRIM interacts with an I2O peripheral device. It also goes into the details of how the NI uses some cut-through optimizations to boost the bandwidth for sends in the system.

Abstract

Resource rich clusters are an emerging category of clusters of workstations where cluster nodes comprise modern CPUs as well as high-performance peripheral devices such as intelligent I/O interfaces, active disks, and capture devices that directly access the network. These clusters target specific applications such as digital libraries, web servers, and multimedia kiosks. We argue that such clusters benefit from a re-examination of the design of the message layer to retain high performance communication while facilitating the interface to endpoints for a variety of devices.

This paper describes a message layer design which includes optimistic flow control, the use of logical channels, a push-style cut-through injection optimization, and an API supporting cluster-wide active message handler management. The goal is to support a number of diverse cluster hardware configurations where communication endpoints exist in a variety of locations within a node. The current implementation has been tested on a Myrinet cluster with communication endpoints located in the host CPUs as well as Intel i960 based I2O server cards.

Publications

- MUG Paper Craig Ulmer and Sudhakar Yalamanchili, "A Messaging Layer for Heterogeneous Endpoints in Resource-Rich Clusters", Myrinet User Group.

2000-08-01 Tue

wsn data pub

The initial three years of my PhD were funded through a NASA Graduate Student Research Program fellowship. One of the benefits of this program was that they encouraged students to come out during the summers to learn more about the problems that NASA faces. I spent my 1999 and 2000 summers in Pasadena, working in the Center for Integrated Space Microsystems. While I initially had plans to evaluate how well my GRIM software worked with one of their clusters, my center director asked if I'd be interested in trying something a little different. He told me that the recent success with the Sojourner rover had sparked a great deal of interest in deploying more in situ sensors on Mars. He challenged me to think about engineering problems NASA would face if it were to cast hundreds to thousands of wireless sensor nodes across Mars.

It was very different from the PhD topic I had been studying, but it was too interesting to pass up. I dove into the papers to learn what people had been doing with wireless sensor networks on Earth. As a networking person, the part that interested me the most was figuring out how a collection of low power, low performance sensor nodes would boot up, establish a routable network, and then collect meaningful information over a geographic region. There were many examples to draw from on Earth, including battlefield sensors, buoy networks, and arctic tumbleweed sensors. My director introduced me to people from all over the lab so I could learn more about how NASA builds resilient embedded systems that are designed to survive being dropped out of the atmosphere into an environment with harsh thermal constraints.

Although the NASA summers took me off course into a side topic that delayed my graduation, they were one of the best things I did during my academic career because they encouraged me to think about hard problems that were outside of my comfort zone. I wrote up the technical report below summarizing some of the things I learned, though I never got it officially assigned a report number at JPL or Georgia Tech. I put the paper up on my school web page, where it wound up getting referenced more than I would have thought.

Publications

- Summer Report Craig Ulmer, Sudhakar Yalamanchili, and Leon Alkalai "Wireless Distributed Sensor Networks for In-situ Exploration of Mars". NASA JPL Summer Internship Report.

This is the first paper where I talk about the General-purpose Reliable In-order Messages (GRIM) communication layer that I've been developing for Myrinet. My idea is that we should be building communication libraries that can route data between host processors and remote peripheral devices that are distributed throughout the cluster. We're calling these types of systems "resource rich clusters" because each node has computing resources beyond its host CPU. The current version of GRIM offloads a lot of the message layer management responsibilities to the network interface, which reduces the effort required for peripheral devices to communicate in the platform.

Abstract

Resource rich clusters are an emerging category of computational platform where cluster nodes have both CPUs as well as high-performance I/O cards. These clusters target specific applications such as digital libraries, web servers, and multimedia kiosks. The presence of communication endpoints at locations other than the host CPU requires a re-examination of how middleware for these clusters should be constructed.

A key issue of middleware design is the management of flow control for the reliable delivery of messages. We propose using a network interface based optimistic flow control scheme to address resource rich cluster requirements. We implement this functionality with a message layer called GRIM, and compare its general performance to other well-known message layers. This implementation suggests that the necessary middleware functionality can not only be constructed efficiently, but also in a way that provides additional middleware benefits.
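The following is a minimal Python simulation of optimistic flow control in general. It assumes the sender transmits without first reserving receive buffers. The receiver NACKs a message when its buffer pool is full. The sender keeps a copy of every unacknowledged message and stops at the first NACK so delivery stays in order. The names `Receiver`, `Sender`, and `simulate` are hypothetical. This is an illustration of the technique, not GRIM's actual network interface firmware or wire protocol.

```python
from collections import deque


class Receiver:
    """Hypothetical receiving endpoint with a fixed pool of message buffers."""

    def __init__(self, slots):
        if slots < 1:
            raise ValueError("receiver needs at least one buffer slot")
        self.slots = slots
        self.buffers = deque()

    def accept(self, msg):
        # Optimistic scheme: space was not reserved in advance, so the
        # receiver checks now and answers with an ACK (True) or NACK (False).
        if len(self.buffers) < self.slots:
            self.buffers.append(msg)
            return True
        return False

    def consume(self):
        # The host or device drains one buffer, freeing a slot.
        return self.buffers.popleft() if self.buffers else None


class Sender:
    """Hypothetical sender that holds each message until it is ACKed."""

    def __init__(self):
        self.pending = deque()
        self.sent = 0          # total transmissions, including retries
        self.retransmits = 0   # transmissions that were NACKed

    def queue(self, msg):
        self.pending.append(msg)

    def pump(self, receiver):
        # Transmit optimistically until the queue empties or a NACK arrives.
        while self.pending:
            self.sent += 1
            if receiver.accept(self.pending[0]):
                self.pending.popleft()   # ACK: release the sender's copy
            else:
                self.retransmits += 1    # NACK: keep the copy for a retry
                break                    # stop so later messages stay in order


def simulate(num_msgs, slots):
    """Send num_msgs messages through a receiver that has `slots` buffers."""
    rx, tx = Receiver(slots), Sender()
    for i in range(num_msgs):
        tx.queue(i)
    delivered = []
    while tx.pending or rx.buffers:
        tx.pump(rx)
        msg = rx.consume()
        if msg is not None:
            delivered.append(msg)
    return delivered, tx.sent, tx.retransmits


print(simulate(4, 2))  # → ([0, 1, 2, 3], 6, 2)
```

With two buffers and four messages, all four arrive in order at the cost of two retransmissions. The general trade-off is that when receivers usually have space, no round trip is spent acquiring credits up front. The price is an occasional resend when they do not.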

Publications

- PDPTA Paper Craig Ulmer and Sudhakar Yalamanchili, "An Extensible Message Layer for High-Performance Clusters". Parallel and Distributed Processing Techniques and Applications.

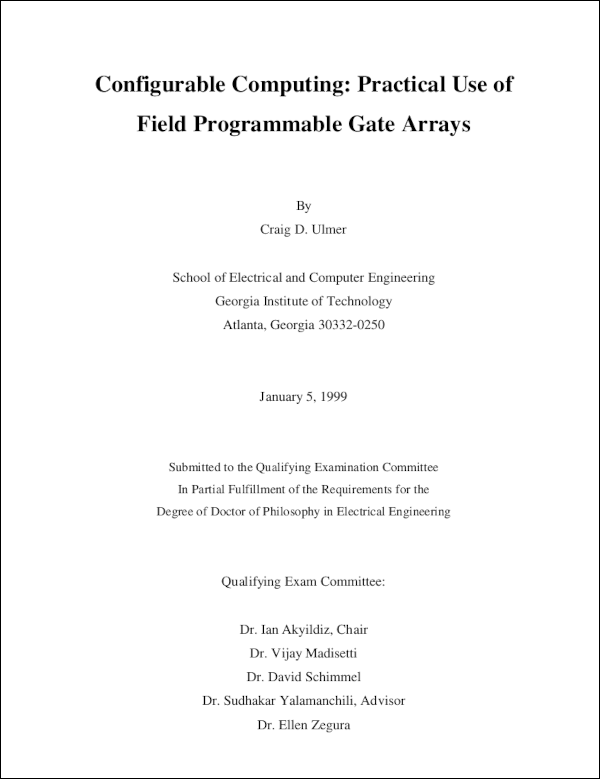

After passing the preliminary exams to enter the Ph.D. program at Georgia Tech, the next major step is passing the qualifying exam. The purpose of the qualifying exam is to show that a student can walk into a new topic, learn everything they can about it, write a reasonable summary of the current state of the art in that topic, and present the material to a committee, all within one month's time. I picked a committee of people that I knew were tough but fair and hoped for the best on the topic. I was relieved when they asked me to focus my attention on using FPGAs to enable a field of computing called configurable computing.

I spent a considerable amount of time that month downloading papers and looking up conference proceedings in the library. While I'd worked with FPGAs some during my master's degree, I didn't know the ideas went all the way back to the 1960s (I actually found Gerald Estrin's fixed+variable paper in print at the library). I learned a good bit about the commercial chip families and marveled at some of the ideas people were talking about with custom computing machines. There's nothing better than having an excuse to set aside time to learn all you can about an interesting subject. It took a bit of work to figure out how to summarize the material I'd covered and fit it into a presentable form within a hard page limit.

My review committee included three computer architecture professors and two networking professors who had a background in hardware. Of the five, Vijay was the only professor that worried me. I'd taken a rapid prototyping class with him earlier and had seen him rip into a few students when we had to take turns presenting other people's research papers. Fortunately, I realized before it was my turn to do a class presentation that he was only vicious because he wanted to get to the truth of an idea. When he raised issues during my class presentation, I defended the topic and didn't back down, which I think he respected. However, I wasn't sure how well this confidence would carry me in my own exam.

In the end things went pretty well. Dave probed me on some parallelism questions, which ironically Vijay answered for me, thinking Dave simply didn't understand the material. They sifted through the cookies I'd brought, asked me to leave for the closed discussion, invited me back in, and then told me I'd passed, just like that. It was a huge relief and a boost to my confidence.

Publications

- Qualifying Exam Craig Ulmer, "Configurable Computing: Practical Use of Field Programmable Gate Arrays".

Presentation

1997-11-10 Mon

dsp code pub

During my (first) senior year as an undergrad at Georgia Tech, the ECE department implemented a large curriculum change for Computer Engineers. One of the big changes for me was that the digital signal processing (DSP) group pushed to get an intro to signal processing class taught at the beginning of the program instead of as an elective. The idea was that they wanted to show new students what they could do with DSP so they'd have a motivation to continue through the (often boring) math and circuit courses and graduate. While I could have just followed the old curriculum to graduate, I signed up for the first class of EE2200, taught by Ron Schafer. It was the best class I took as an undergrad, as it opened up my eyes to how all the math and circuitry work I'd done in other classes could be used to do something fun.

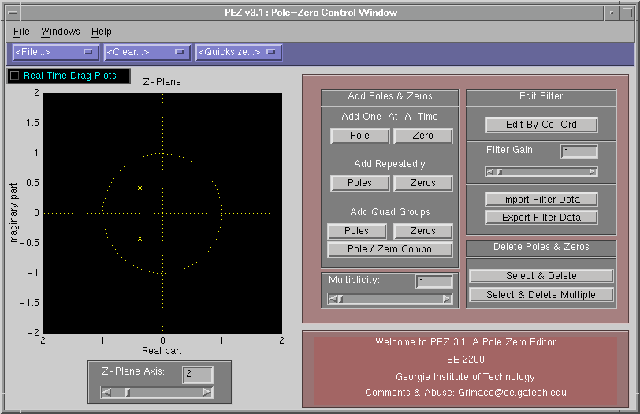

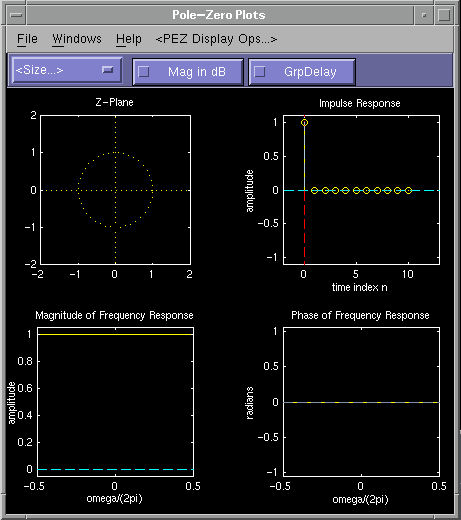

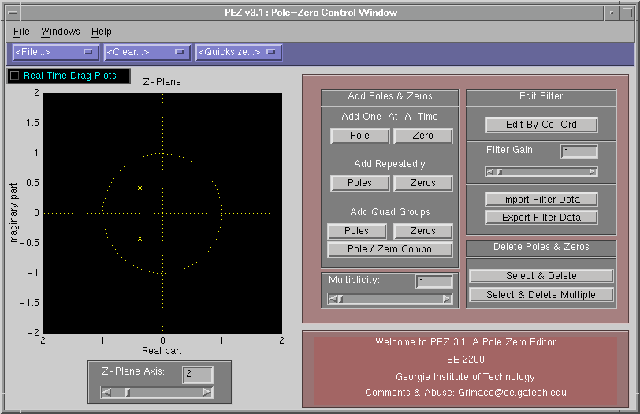

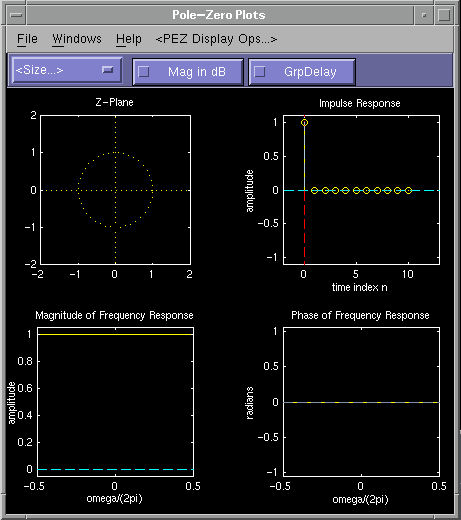

After a follow-on course in analog signal processing, I asked Dr. Schafer if there were any self-study projects I could do to learn more before I took the senior DSP electives. He mentioned that there had been a lot of improvements in MathWorks' Matlab software recently, and the department was interested in figuring out whether they could use its new GUI features to help build more demos that could be used to teach DSP. I signed up and started learning how to use the GUI commands to build simple demos. The first few programs were pretty trivial, simply providing sliders and edit boxes so people could more easily change parameters to see some DSP concepts visually. Then, Dr. Schafer asked me to write a simple Pole-Zero editor that would allow people to graphically move a filter's poles and zeros around in the Z-plane and see the responses. After a lot of work I had a program called PeZ. Dr. Schafer was pleased with it (after he corrected some spelling mistakes!), showed it to Dr. McClellan, and then we pushed it out to the Matlab mailing list. People were enthusiastic about it, so I continued improving it. Eventually Schafer and McClellan wrote a book called DSP First that captured the teachings and demos of EE2200. They included PeZ on a CD that came with the book and had some text in the book about how to use it.

Writing a Pole/Zero Editor

Initially PeZ started out as something simple: we just wanted a panel where a user could click on the Z-plane to add/remove poles and zeros. After each change, another window showing the response functions would update. This functionality was pretty useful by itself: when explaining things to students, you could graphically show how delay got introduced in the impulse response, or how the system blew up when you put a pole outside the unit circle.
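The core computation behind a pole/zero editor like this can be sketched in a few lines. This is a minimal Python version, not PeZ's Matlab code. It assumes the factored form H(z) = prod(1 - z_k z^-1) / prod(1 - p_k z^-1) with unit gain. It expands the factors into difference-equation coefficients, then computes the impulse response, the magnitude response on the unit circle, and a stability check. All function names are hypothetical.

```python
import cmath
import math


def poly_from_roots(roots):
    """Coefficients of prod(1 - r z^-1), ordered by increasing power of z^-1."""
    coeffs = [1 + 0j]
    for r in roots:
        # Multiply the running polynomial by (1 - r z^-1).
        nxt = coeffs + [0j]
        for i in range(1, len(nxt)):
            nxt[i] -= r * coeffs[i - 1]
        coeffs = nxt
    return coeffs


def impulse_response(zeros, poles, n):
    """First n samples of h[k] from the difference equation (a[0] == 1)."""
    b, a = poly_from_roots(zeros), poly_from_roots(poles)
    h = []
    for k in range(n):
        # The input is a unit impulse, so the feed-forward sum is just b[k].
        acc = b[k] if k < len(b) else 0j
        for m in range(1, len(a)):
            if k - m >= 0:
                acc -= a[m] * h[k - m]   # feedback from earlier outputs
        h.append(acc)
    return h


def magnitude_response(zeros, poles, w):
    """|H(e^{jw})| for H(z) = prod(1 - z_k z^-1) / prod(1 - p_k z^-1)."""
    zinv = cmath.exp(-1j * w)
    num = den = 1 + 0j
    for zk in zeros:
        num *= 1 - zk * zinv
    for pk in poles:
        den *= 1 - pk * zinv
    return abs(num / den)


def is_stable(poles):
    """A causal system is stable only if every pole lies inside the unit circle."""
    return all(abs(p) < 1 for p in poles)


# A pole at 0.5 decays; moving it to 1.5 makes h[k] = 1.5**k grow without bound.
print([round(v.real, 4) for v in impulse_response([], [0.5], 4)])
print(is_stable([1.5]), magnitude_response([-1], [], math.pi))
```

Dragging a marker amounts to calling these functions again with the new location. A zero at z = -1, for example, passes DC with gain 2 and nulls the frequency w = pi.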

It didn't take long for people to start asking for new features. I had to find a way to deal with multiplicity (putting multiple poles at the same place). People wanted an edit panel so they could look at and edit the exact coordinates of a pole/zero. McClellan pushed for an option to add pairs of poles/zeros at a time (symmetric about the x-axis, or at inverse distances from the unit circle). People wanted a better way to print their figures (someone else wrote a nice printer option menu and allowed me to use it).
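The pair options and the multiplicity bookkeeping come down to simple complex arithmetic. Here is a hedged Python sketch. It is not PeZ's code, and the function names and matching tolerance are assumptions. A conjugate partner mirrors a point across the x-axis. A reciprocal partner 1/conj(z) keeps the angle and inverts the radius. Coincident points are counted so that one marker can be drawn with a multiplicity label.

```python
def conjugate_pair(z):
    """z plus its mirror image across the real (x) axis."""
    z = complex(z)
    # Assumption: a point on the real axis is its own mirror, so add it once.
    return [z] if z.imag == 0 else [z, z.conjugate()]


def reciprocal_pair(z):
    """z plus 1/conj(z): same angle, radius 1/|z| (mirrored across the unit circle)."""
    z = complex(z)
    if z == 0:
        raise ValueError("the origin has no reciprocal partner")
    return [z, 1 / z.conjugate()]


def multiplicities(points, tol=1e-9):
    """Group coincident points into (location, count) pairs, in first-seen order."""
    groups = []
    for p in points:
        for g in groups:
            if abs(g[0] - p) < tol:
                g[1] += 1      # another root at an existing location
                break
        else:
            groups.append([complex(p), 1])
    return [(loc, count) for loc, count in groups]


print(conjugate_pair(0.3 + 0.4j))
print(reciprocal_pair(0.5j))
print(multiplicities([0.5, 0.5, 0.2]))
```

Conjugate pairs keep the filter coefficients real. Applying both operations yields the four-point groups z, conj(z), 1/z, 1/conj(z) that characterize the zeros of real linear-phase FIR filters.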

The big feature though was being able to drag poles/zeros around and see the response plots change in real time. Adding the basic drag functionality was hard: Matlab didn't provide good GUI tools at the time, so I had to do a lot of low-level GUI operations to figure out when someone was clicking on a pole/zero, moving it around, and then letting go of the mouse button. The system worked, but all the calculations made the whole thing clunky, with updates happening about once a second. I did a good bit of work to improve things on our UNIX workstations, but for some reason the Windows version of Matlab really lagged behind. Fortunately, Dr. Yoder at Rose-Hulman had a motivated student named Brad North hack on it and fix it. Brad did some crazy optimizations with how things were refreshed and made huge improvements for all the versions.
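The press/move/release logic described above amounts to a small state machine plus a hit test. Below is a minimal Python sketch with no GUI toolkit attached. `DragController`, its method names, and the `pick_radius` tolerance are hypothetical. A real handler would be wired to whatever mouse callbacks the GUI system provides.

```python
class DragController:
    """Hypothetical handler that lets the user drag pole/zero markers."""

    def __init__(self, markers, pick_radius=0.05):
        self.markers = markers          # marker locations as complex numbers
        self.pick_radius = pick_radius  # how close a click must be to grab one
        self.active = None              # index of the marker being dragged

    def button_down(self, x, y):
        # Hit test: grab the nearest marker within pick_radius, if any.
        click = complex(x, y)
        best = None
        for i, m in enumerate(self.markers):
            d = abs(m - click)
            if d <= self.pick_radius and (best is None or d < best[1]):
                best = (i, d)
        self.active = best[0] if best else None
        return self.active

    def motion(self, x, y):
        # Move the grabbed marker; True tells the caller to redraw responses.
        if self.active is None:
            return False
        self.markers[self.active] = complex(x, y)
        return True

    def button_up(self, x, y):
        # Final position on release, then drop the grab.
        moved = self.motion(x, y)
        self.active = None
        return moved


dc = DragController([0.5 + 0j, -0.5 + 0j])
dc.button_down(0.52, 0.01)   # grabs the marker at 0.5
dc.motion(0.6, 0.2)
dc.button_up(0.7, 0.0)
print(dc.markers)
```

Because `motion` reports every mouse event, a caller that recomputes the response plots on each one pays the full calculation cost per event. Recomputing only on release, or at a capped rate, is one common way to trade smoothness for speed.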

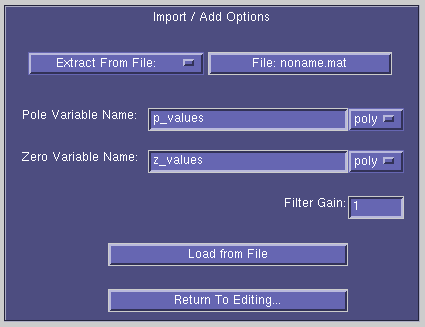

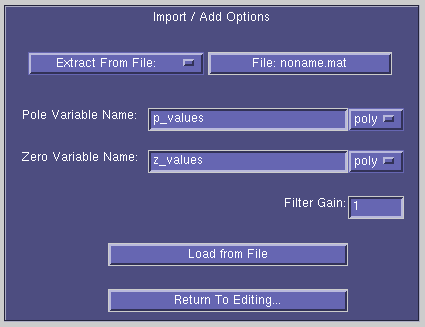

One of the last cool features I added was the ability to import data from other Matlab sources. I made a special interface to talk with MathWorks' awesome filtdemo tool, which allowed you to generate common filters in a parameterized way. You could save and load values from a file. Also, you could pull in data from the existing Matlab environment. This import was useful because it meant you could build your own filter tools/functions and then pass the filter parameters into PeZ.

Matlab's Amazing Portability

One of the amazing things about coding up the GUIs in Matlab was that it (potentially) made my code usable on a large number of OSs and platforms. Matlab served as a mini-OS in a sense, normalizing differences in GUIs between different OSs. I did almost all of my work on HP Apollo workstations in the CoC, but the GUIs (eventually) worked on Windows, Mac, and Sun. This portability blew our minds when we started: you have to remember that Java didn't exist at that time and most GUI work meant a lot of X hacking. Matlab definitely did have cross-platform compatibility problems (especially on Windows). However, I was always able to hack around most of these problems and provide one program that ran everywhere. MathWorks did well when they jumped from version 4 to 5. It didn't take much work to get PeZ running because they continued to support their legacy APIs.

In retrospect, the only way I made it through PeZ's initial development was by not knowing how horrendous it really was. The GUI code was extremely difficult: there weren't any GUI layout editors at the time, and you had to manually define all the coordinates of every object. Whenever a prof asked for a new button, I had to go back and redefine the coordinates of every GUI object. Matlab 4 was missing some key things: you could only put one function in a file and they didn't have data structures (beyond matrices). That meant you had to find clever ways to push information around between functions (for speed, I used lots of globals). The worst thing, though, was that I embedded script commands into the callback operation of every button. These scripts had to be encoded as strings, so they often needed a lot of escaping whenever I had to put a tick (single quote) inside of a tick-quoted string.
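In Matlab, a single quote inside a single-quoted character array is written as two single quotes. Every level of nesting therefore doubles the quotes again. The hypothetical Python helper below (not part of PeZ) shows how quickly that compounds when a callback string itself contains a quoted string.

```python
def matlab_quote(text):
    """Wrap text as a MATLAB single-quoted char literal, doubling inner quotes."""
    return "'" + text.replace("'", "''") + "'"


callback = "disp('hello')"                  # command a button should run
print(matlab_quote(callback))               # 'disp(''hello'')'
print(matlab_quote(matlab_quote("it's")))   # '''it''''s'''
```

The first result is the kind of literal that goes into a button's string-valued `Callback` property, as in `uicontrol('Style','pushbutton','Callback','disp(''hello'')')`. One more level of embedding, such as a string passed to `eval` inside a callback, doubles every quote again.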

Global Users

Back when I first started PeZ, the Web was new and we simply didn't have the dev tools we have now (e.g., no GitHub, nor git for that matter). After PeZ was in a good enough state to release, I posted about it to the Matlab newsgroup. A lot of random people emailed me about it to ask questions and give feedback. I eventually put up an awful-looking webpage off my Ga Tech account that provided download links and gave more info on how to use it. At one point I had a map showing all the different countries where PeZ had been used.

Schafer, McClellan, and Yoder organized a group of students to help put together some tutorials and multimedia demos that could be used to supplement a new book they were writing for their EE2200 class. We had a lot of good discussions about cool things that could go on the CD. By then Java was available and provided a better place for hosting software, since you only needed a browser to run it (Matlab was still $100 for a student). We talked about doing a Java port, but decided against it because the work looked substantial and I was moving on to other things. The team put together a great CD of things, and I was happy to get PeZ on it and be included in a book that was used by so many students.

Publications

- DSP First Book CD James McClellan, Ronald Schafer, and Mark Yoder, DSP First: A Multimedia Approach, Prentice Hall, ISBN-13: 978-0132431712, January 1998.

Code