2018-06-11 Mon

faodel pub

We published an unclassified unlimited release (UUR) paper.

Abstract

Composition of computational science applications, whether into ad hoc pipelines for analysis of simulation data or into well-defined and repeatable workflows, is becoming commonplace. In order to scale well as projected system and data sizes increase, developers will have to address a number of looming challenges. Increased contention for parallel filesystem bandwidth, accommodating in situ and ex situ processing, and the advent of decentralized programming models will all complicate application composition for next-generation systems. In this paper, we introduce a set of data services, Faodel, which provide scalable data management for workflows and composed applications. Faodel allows workflow components to directly and efficiently exchange data in semantically appropriate forms, rather than those dictated by the storage hierarchy or programming model in use. We describe the architecture of Faodel and present preliminary performance results demonstrating its potential for scalability in workflow scenarios.

Publications

- IWAC Paper: Craig Ulmer, Shyamali Mukherjee, Gary Templet, Scott Levy, Jay Lofstead, Patrick Widener, Todd Kordenbrock, and Margaret Lawson, "Faodel: Data Management for Next-Generation Application Workflows", ScienceCloud'18, June 2018.

We received DOE approval to release version 1.1803.1 ("Cachet") of Faodel on GitHub.

Code

The code is now hosted on GitHub:

2018-02-05 Mon

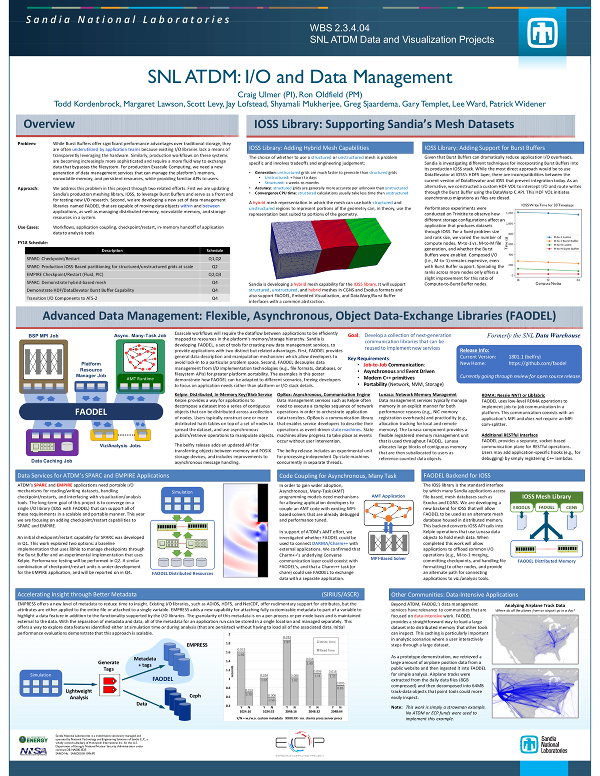

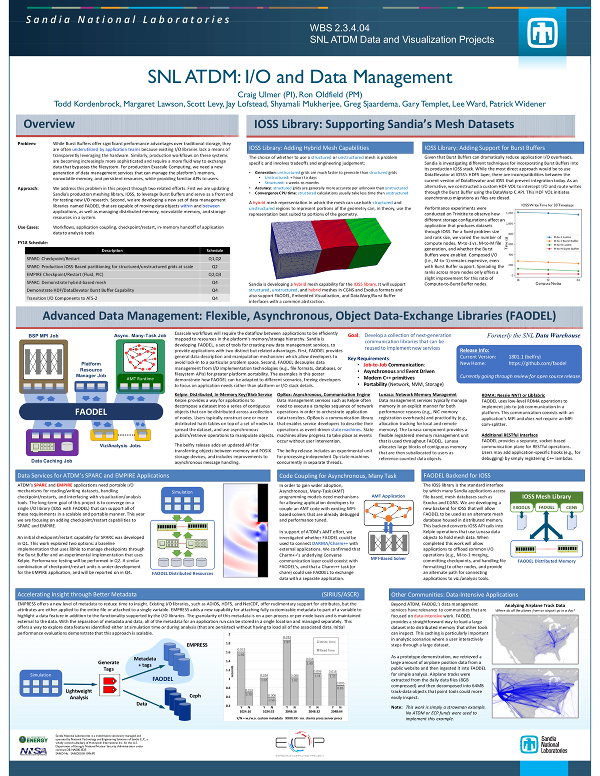

net systems pub

We presented an unclassified unlimited release (UUR) poster.

Poster

It's official: I'm renaming my main project at work to FAODEL: Flexible, asynchronous, data-object exchange libraries. FAODEL (pronounced fay-oh-dell) comes from a simplification of the Gaelic term faodhail, which is a land bridge used to cross between islands. Here are two examples between the Monach Islands in Scotland:

What's a faodhail? WikiSource says:

faodhail, ford, a narrow channel fordable at low water, a hollow in the sand retaining tide water: from N. vaðill, a shallow, a place where straits can be crossed, Shet vaadle, Eng. wade.

2018-01-01 Mon

faodel io hpc pub

We published an unclassified unlimited release (UUR) report.

Abstract

Recent high-performance computing (HPC) platforms such as the Trinity Advanced Technology System (ATS-1) feature burst buffer resources that can have a dramatic impact on an application's I/O performance. While these non-volatile memory (NVM) resources provide a new tier in the storage hierarchy, developers must find the right way to incorporate the technology into their applications in order to reap the benefits. Similar to other laboratories, Sandia is actively investigating ways in which these resources can be incorporated into our existing libraries and workflows without burdening our application developers with excessive, platform-specific details. This FY18Q1 milestone summarizes our progress in adapting the Sandia Parallel Aerodynamics and Reentry Code (SPARC) in Sandia's ATDM program to leverage Trinity's burst buffers for checkpoint/restart operations. We investigated four different approaches with varying tradeoffs in this work: (1) simply updating the job script to use stage-in/stage-out burst buffer directives, (2) modifying SPARC to use LANL's hierarchical I/O (HIO) library to store/retrieve checkpoints, (3) updating Sandia's IOSS library to incorporate the burst buffer in all meshing I/O operations, and (4) modifying SPARC to use our Kelpie distributed memory library to store/retrieve checkpoints. Team members were successful in generating initial implementations for all four approaches, but were unable to obtain performance numbers in time for this report (reasons: initial problem sizes were not large enough to stress I/O, and SPARC refactor will require changes to our code). When we presented our work to the SPARC team, they expressed the most interest in the second and third approaches. The HIO work was favored because it is lightweight, unobtrusive, and should be portable to ATS-2. The IOSS work is seen as a long-term solution, and is favored because all I/O work (including checkpoints) can be deferred to a single library.

Publications

- ECP Report: Ron Oldfield, Craig Ulmer, Patrick Widener, and Lee Ward, "SPARC: Demonstrate burst-buffer-based checkpoint/restart on ATS-1", SAND2018-0299R.